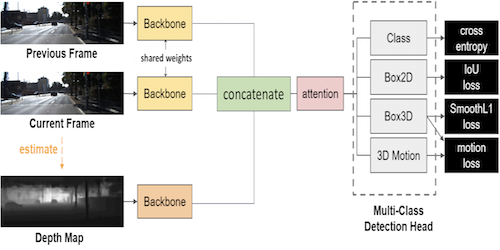

Low-level Sensor Fusion Network for 3D Vehicle Detection using Radar Range-Azimuth Heatmap and Monocular Image

Jinhyeong Kim (Korea Advanced Institute of Science and Technology), Youngseok Kim (Korea Advanced Institute of Science and Technology (KAIST))*, Dongsuk Kum (Korea Advanced Institute of Science and Technology)

Keywords: Recognition: Feature Detection, Indexing, Matching, and Shape Representation

Abstract:

Robust and accurate object detection on roads shared with many kinds of objects is essential for automated driving. Radar has been used in commercial advanced driver assistance systems (ADAS) for a decade because of its low cost and high reliability. However, its low resolution and poor classification performance have restricted its use to limited driving conditions, such as detecting a few preceding vehicles on highways. To fully exploit radar in complex road environments, we propose a learning-based detection network that uses a radar range-azimuth heatmap and a monocular image. We show that radar-image fusion can overcome the inherent weaknesses of radar by leveraging camera information. The proposed network has a two-stage architecture that combines radar and image feature representations, rather than fusing each sensor's prediction results, to improve detection performance over a single sensor. To demonstrate the effectiveness of the proposed method, we collected radar, camera, and LiDAR data in driving environments that vary in vehicle speed, lighting conditions, and traffic volume. Experimental results show that the proposed fusion method outperforms both the radar-only and the image-only methods.

Similar Papers

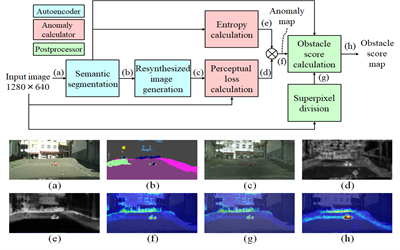

Road Obstacle Detection Method Based on an Autoencoder with Semantic Segmentation

Toshiaki Ohgushi (TOYOTA), Kenji Horiguchi (TOYOTA), Masao Yamanaka (TOYOTA)*

Semantic Synthesis of Pedestrian Locomotion

Maria Priisalu (Lund University)*, Ciprian Paduraru (IMAR), Aleksis Pirinen (Lund University), Cristian Sminchisescu (Lund University)

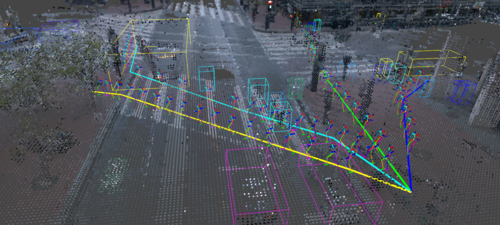

3D Object Detection from Consecutive Monocular Images

Chia-Chun Cheng (National Tsing Hua University)*, Shang-Hong Lai (Microsoft)