Active Learning for Video Description With Cluster-Regularized Ensemble Ranking

David M. Chan (University of California, Berkeley)*, Sudheendra Vijayanarasimhan (Google Research), David A. Ross (Google), John F. Canny (UC Berkeley)

Keywords: Video Analysis and Event Recognition

Abstract:

Automatic video captioning aims to train models to generate text descriptions for all segments in a video. However, the most effective approaches require large amounts of manual annotation, which is slow and expensive. Active learning is a promising way to efficiently build a training set for video captioning tasks while reducing the need to manually label uninformative examples. In this work, we explore various active learning approaches for automatic video captioning and show that a cluster-regularized ensemble strategy provides the best active learning approach for efficiently gathering training sets for video captioning. We evaluate our approaches on the MSR-VTT and LSMDC datasets using both transformer- and LSTM-based captioning models and show that our novel strategy can achieve high performance while using up to 60% less training data than the strong state-of-the-art random selection baseline.
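The abstract does not spell out the selection procedure, so the following is only a minimal sketch of one way a cluster-regularized ensemble ranking could work, not the authors' implementation. It assumes that each ensemble member assigns a scalar score to every unlabeled video (for example a caption likelihood), that the variance of those scores across members serves as a disagreement measure, and that cluster assignments for the videos are already available. The function name `select_batch` and its parameters are hypothetical.

```python
import numpy as np


def select_batch(member_scores, cluster_ids, budget):
    """Pick up to `budget` unlabeled sample indices, spread across clusters.

    member_scores: array of shape (n_members, n_samples), one score per
        ensemble member and sample (assumed input, e.g. caption likelihoods).
    cluster_ids: array of shape (n_samples,), a precomputed cluster label
        for each sample (assumed input).
    """
    member_scores = np.asarray(member_scores, dtype=float)
    cluster_ids = np.asarray(cluster_ids)

    # Disagreement proxy (an assumption): variance of the members' scores.
    disagreement = member_scores.var(axis=0)

    # For each cluster, queue its samples from most to least disagreement.
    queues = {}
    for c in np.unique(cluster_ids):
        idx = np.flatnonzero(cluster_ids == c)
        order = idx[np.argsort(-disagreement[idx], kind="stable")]
        queues[c] = [int(i) for i in order]

    # Round-robin over clusters so that no single cluster dominates the batch.
    selected = []
    while len(selected) < budget and any(queues.values()):
        for c in sorted(queues):
            if queues[c] and len(selected) < budget:
                selected.append(queues[c].pop(0))
    return selected


if __name__ == "__main__":
    scores = [[0.1, 0.9, 0.5, 0.5, 0.2],
              [0.1, 0.1, 0.5, 0.4, 0.8]]
    clusters = [0, 0, 0, 1, 1]
    print(select_batch(scores, clusters, budget=3))
```

The round-robin step is the regularizer in this sketch: ranking by disagreement alone would tend to fill a batch with near-duplicate videos from one region of the data, whereas cycling through clusters forces the batch to cover different kinds of content.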


Similar Papers

Data-Efficient Ranking Distillation for Image Retrieval

Zakaria Laskar (Aalto University)*, Juho Kannala (Aalto University, Finland)

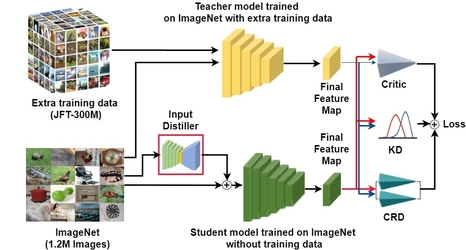

Compensating for the Lack of Extra Training Data by Learning Extra Representation

Hyeonseong Jeon (Sungkyunkwan University)*, Siho Han (Sungkyunkwan University), Sangwon Lee (SKKU), Simon S. Woo (SKKU)

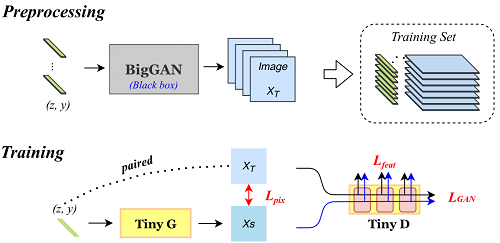

TinyGAN: Distilling BigGAN for Conditional Image Generation

Ting-Yun Chang (National Taiwan University)*, Chi-Jen Lu (Academia Sinica)