Compensating for the Lack of Extra Training Data by Learning Extra Representation

Hyeonseong Jeon (Sungkyunkwan University)*, Siho Han (Sungkyunkwan University), Sangwon Lee (Sungkyunkwan University), Simon S. Woo (Sungkyunkwan University)

Keywords: Datasets and Performance Analysis

Abstract:

Numerous deep learning models have been proposed for image classification on the ImageNet database, each outperforming the previous state of the art. In most cases, the improvement has come from novel data augmentation techniques and from learning or hyperparameter tuning strategies, leading to approaches such as FixNet, NoisyStudent, and Big Transfer. However, the latter approaches, while achieving state-of-the-art performance on ImageNet, required a significant amount of extra training data, namely the JFT-300M dataset. This dataset contains 300 million images, 250 times larger than ImageNet in number of samples, but it is not publicly available; only a model pre-trained on it is. In this paper, we introduce a novel framework, Extra Representation (ExRep), which works around the lack of access to JFT-300M by using ImageNet together with the publicly available model pre-trained on JFT-300M. We take a knowledge distillation approach, treating the model pre-trained on both JFT-300M and ImageNet as the teacher network and the model pre-trained only on ImageNet as the student network. The proposed method learns the additional representation effects of the teacher model, raising the student model's performance to a level similar to the teacher's and achieving high classification performance without extra training data.
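To make the teacher-student setup concrete, the following is a minimal sketch of a generic soft-target knowledge distillation loss for a single example. It is not the ExRep objective, which the abstract does not specify. The function names and the `temperature` and `alpha` parameters and their default values are assumptions chosen for illustration.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Log of the temperature-scaled softmax, computed stably."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max to avoid overflow in exp
    log_sum = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum for z in scaled]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """Generic distillation loss (illustrative, not the paper's method).

    Combines KL(teacher || student) on temperature-softened outputs
    with cross-entropy against the ground-truth label.
    """
    log_t = log_softmax(teacher_logits, temperature)
    log_s = log_softmax(student_logits, temperature)
    # KL divergence between the softened teacher and student distributions
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_t, log_s))
    # Standard cross-entropy of the student on the hard label (temperature 1)
    ce = -log_softmax(student_logits)[label]
    # The T^2 factor keeps soft-target gradients on a comparable scale
    return alpha * temperature ** 2 * kl + (1.0 - alpha) * ce


# Hypothetical logits for a 3-class problem
teacher = [1.0, 2.0, 3.0]
student = [3.0, 2.0, 1.0]
print(distillation_loss(student, teacher, label=2))
```

In this sketch the teacher would stand in for the model pre-trained on JFT-300M and ImageNet, and the student for the model trained on ImageNet only; the loss shrinks as the student's softened predictions approach the teacher's.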

Similar Papers

Data-Efficient Ranking Distillation for Image Retrieval

Zakaria Laskar (Aalto University)*, Juho Kannala (Aalto University, Finland)

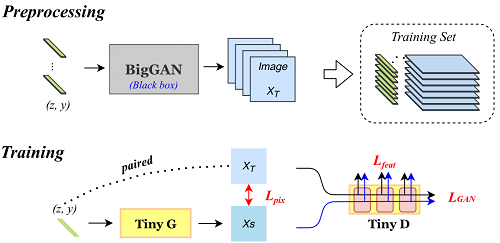

TinyGAN: Distilling BigGAN for Conditional Image Generation

Ting-Yun Chang (National Taiwan University)*, Chi-Jen Lu (Academia Sinica)

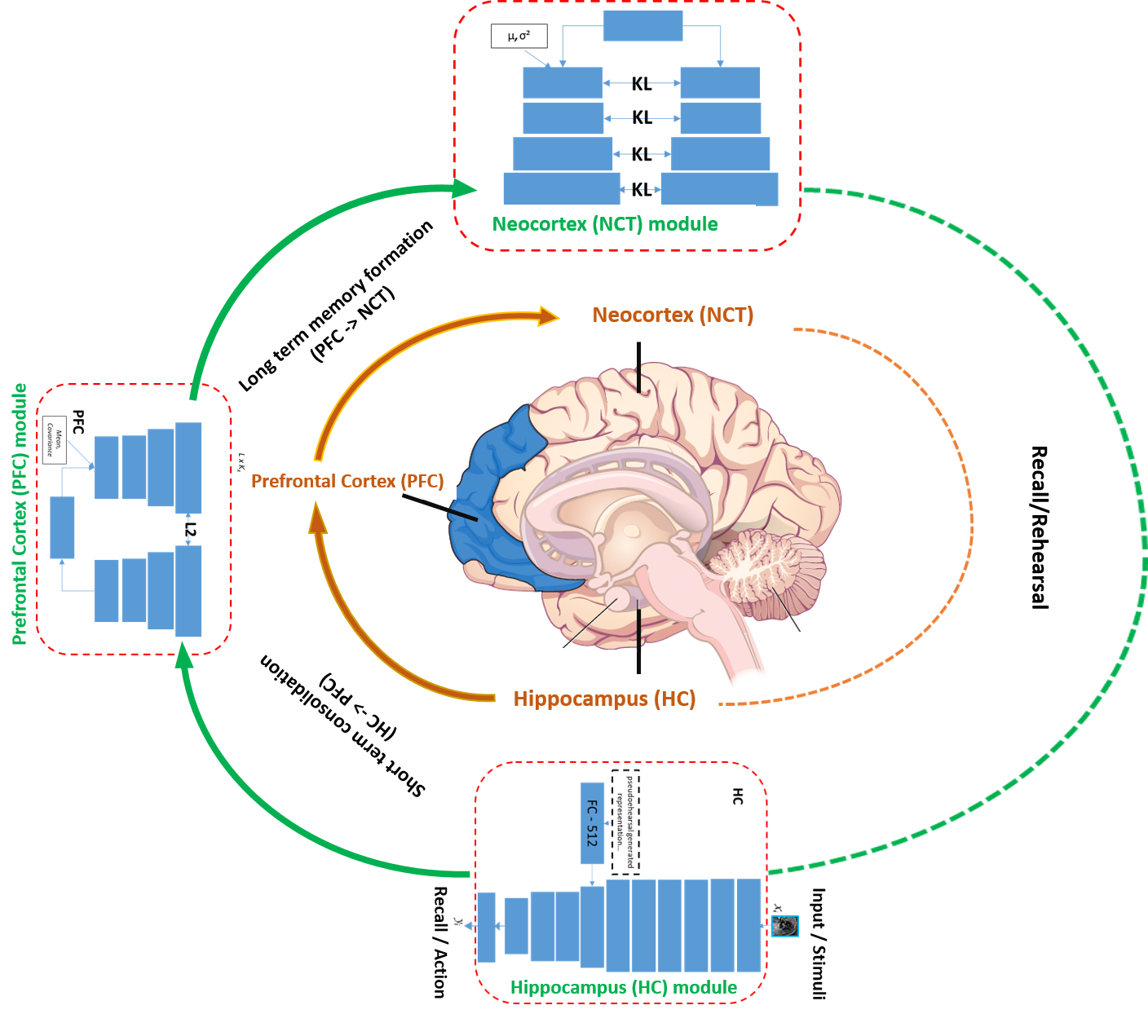

Learn more, forget less: Cues from human brain

Arijit Patra (University of Oxford), Tapabrata Chakraborti (University of Oxford)*