Learn more, forget less: Cues from human brain

Arijit Patra (University of Oxford), Tapabrata Chakraborti (University of Oxford)*

Keywords: Deep Learning for Computer Vision

Abstract:

Humans learn new information incrementally while consolidating old information at every stage of a lifelong learning process. While this appears perfectly natural for humans, the same task has proven challenging for learning machines: deep neural networks remain prone to catastrophic forgetting of previously learnt information when presented with data from a sufficiently new distribution. To address this problem, we present NeoNet, a simple yet effective method motivated by recent findings in computational neuroscience on long-term memory consolidation in humans. The network relies on a pseudorehearsal strategy to model the working of the brain regions associated with long-term memory consolidation. Experiments on benchmark classification tasks yield state-of-the-art results that demonstrate the potential of the proposed method, showing improvements when novel information is added without the need to store exemplars of past classes.
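The abstract does not describe how NeoNet implements pseudorehearsal. As a rough illustration of the general idea only (the classic pseudorehearsal scheme, not the authors' method), the sketch below replaces stored exemplars of past classes with "pseudo-items": random inputs labelled by the frozen old network, which are then mixed into the training data for the new task. The function names `make_pseudo_items` and `build_rehearsal_batch`, the uniform input distribution, and the toy linear "old model" are all hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Example = Tuple[Vector, Vector]  # (input, target)


def make_pseudo_items(
    old_model: Callable[[Vector], Vector],
    n_items: int,
    dim: int,
    seed: int = 0,
) -> List[Example]:
    """Create pseudo-items: random inputs labelled by the frozen old model.

    Assumption (hypothetical): inputs are drawn uniformly from [-1, 1]^dim.
    No stored exemplars of past classes are used.
    """
    rng = random.Random(seed)
    items: List[Example] = []
    for _ in range(n_items):
        x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        items.append((x, old_model(x)))  # target = old model's response to x
    return items


def build_rehearsal_batch(
    new_data: Sequence[Example],
    pseudo_items: Sequence[Example],
    seed: int = 0,
) -> List[Example]:
    """Shuffle new-task examples together with pseudo-items.

    Training on this mixture fits the new task while also pulling the
    network towards its previous input-output mapping.
    """
    batch = list(new_data) + list(pseudo_items)
    random.Random(seed).shuffle(batch)
    return batch


if __name__ == "__main__":
    # Hypothetical "old" network: a fixed linear map standing in for a
    # model already trained on earlier classes.
    def old_model(x: Vector) -> Vector:
        return [2.0 * x[0], -x[1]]

    pseudo = make_pseudo_items(old_model, n_items=4, dim=2, seed=1)
    new = [([0.5, 0.5], [1.0, 0.0]), ([-0.5, 0.2], [0.0, 1.0])]
    for x, y in build_rehearsal_batch(new, pseudo, seed=2):
        print([round(v, 3) for v in x], [round(v, 3) for v in y])
```

In a real class-incremental setup the pseudo-item targets would typically be the old network's class scores, and the mixed batch would feed an ordinary gradient-based training loop; the abstract does not say how NeoNet generates or weights its pseudo-items.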


Similar Papers

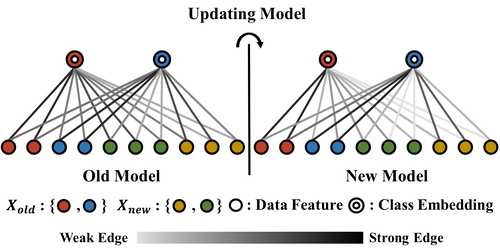

Class-incremental Learning with Rectified Feature-Graph Preservation

Cheng-Hsun Lei (National Chiao Tung University), Yi-Hsin Chen (National Chiao Tung University), Wen-Hsiao Peng (National Chiao Tung University), Wei-Chen Chiu (National Chiao Tung University)*

Data-Efficient Ranking Distillation for Image Retrieval

Zakaria Laskar (Aalto University)*, Juho Kannala (Aalto University, Finland)

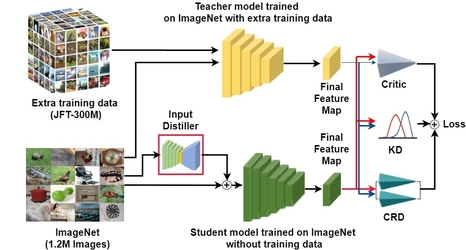

Compensating for the Lack of Extra Training Data by Learning Extra Representation

Hyeonseong Jeon (Sungkyunkwan University)*, Siho Han (Sungkyunkwan University), Sangwon Lee (Sungkyunkwan University), Simon S. Woo (Sungkyunkwan University)