SGNet: Semantics Guided Deep Stereo Matching

Shuya Chen (Zhejiang University), Zhiyu Xiang (Zhejiang University)*, Chengyu Qiao (Zhejiang University), Yiman Chen (Zhejiang University), Tingming Bai (Zhejiang University)

Keywords: 3D Computer Vision

Abstract:

Stereo vision has long been an intensively studied area of computer vision, and deep-learning-based stereo matching networks have become popular in recent years. Despite great progress, it is still challenging to obtain highly accurate disparity maps because of low-texture regions and illumination changes in the scene. High-level semantic information can help handle these problems. In this paper, a deep semantics-guided stereo matching network (SGNet) is proposed. Apart from the necessary semantic branch, three semantics-guided modules are proposed to embed semantic constraints on matching. The joint confidence module produces a confidence for the cost volume based on the consistency of disparity and semantic features between the left and right images. The residual module is responsible for optimizing the initial disparity results according to their semantic categories. Finally, in the loss module, the smoothness of the disparity is supervised based on semantic boundaries and regions. The proposed network has been evaluated on public datasets such as KITTI 2015, KITTI 2012 and Virtual KITTI, and achieves state-of-the-art performance.
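To make the idea of joint disparity and semantic consistency concrete, the following is a minimal sketch and not the paper's joint confidence module, which works on learned features and a cost volume. It assumes a simplified setting: a single scanline, hard semantic labels, and a binary confidence. The function name `joint_consistency` and the `max_diff` threshold are hypothetical.

```python
def joint_consistency(disp_left, disp_right, sem_left, sem_right, max_diff=1.0):
    """Binary per-pixel confidence for one scanline of the left image.

    Assumption (illustrative only): a left pixel at column x with disparity d
    corresponds to the right pixel at column x - d. The match is trusted only
    if both views agree on the disparity (within max_diff) and on the
    semantic label.
    """
    width = len(disp_left)
    confidence = []
    for x in range(width):
        # Column of the corresponding pixel in the right image.
        xr = int(round(x - disp_left[x]))
        if xr < 0 or xr >= width:
            # The match falls outside the right image: no evidence, no trust.
            confidence.append(0.0)
            continue
        disparity_agrees = abs(disp_left[x] - disp_right[xr]) <= max_diff
        semantics_agree = sem_left[x] == sem_right[xr]
        confidence.append(1.0 if disparity_agrees and semantics_agree else 0.0)
    return confidence


# Toy scanline: pixel 0 maps outside the image, pixel 3 has a label mismatch.
example = joint_consistency(
    disp_left=[2.0, 1.0, 1.0, 1.0],
    disp_right=[1.0, 1.0, 1.0, 3.0],
    sem_left=[2, 2, 2, 5],
    sem_right=[2, 2, 7, 5],
)
```

In this toy case only the two middle pixels pass both checks. A learned module would presumably replace the hard thresholds with soft, feature-based scores.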

Similar Papers

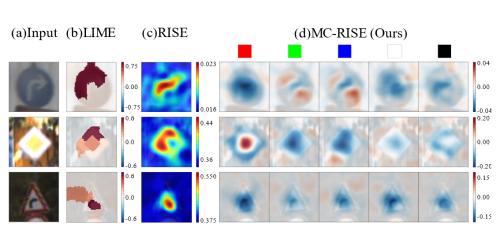

Visualizing Color-wise Saliency of Black-Box Image Classification Models

Yuhki Hatakeyama (SenseTime Japan)*, Hiroki Sakuma (SenseTime Japan), Yoshinori Konishi (SenseTime Japan), Kohei Suenaga (Kyoto University)

A Benchmark and Baseline for Language-Driven Image Editing

Jing Shi (University of Rochester)*, Ning Xu (Adobe Research), Trung Bui (Adobe Research), Franck Dernoncourt (Adobe Research), Zheng Wen (DeepMind), Chenliang Xu (University of Rochester)

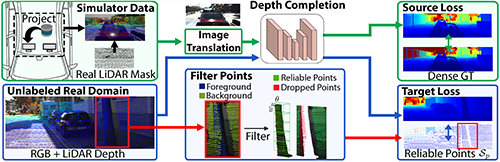

Project to Adapt: Domain Adaptation for Depth Completion from Noisy and Sparse Sensor Data

Adrian Lopez-Rodriguez (Imperial College London)*, Benjamin Busam (Technical University of Munich), Krystian Mikolajczyk (Imperial College London)