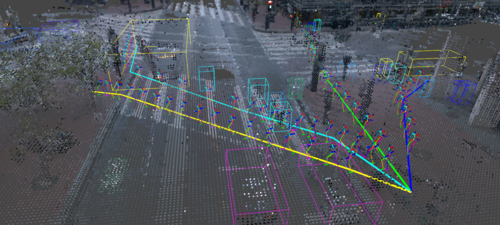

A Two-Stage Minimum Cost Multicut Approach to Self-Supervised Multiple Person Tracking

Kalun Ho (Fraunhofer ITWM)*, Amirhossein Kardoost (University of Mannheim), Franz-Josef Pfreundt (Fraunhofer ITWM), Janis Keuper (hs-offenburg), Margret Keuper (University of Mannheim)

Keywords: Motion and Tracking

Abstract:

Multiple Object Tracking (MOT) is a long-standing task in computer vision. Current approaches based on the tracking-by-detection paradigm either require some sort of domain knowledge or supervision to associate data correctly into tracks. In this work, we present a self-supervised multiple object tracking approach based on visual features and minimum cost lifted multicuts. Our method is based on straightforward spatio-temporal cues that can be extracted from neighboring frames in an image sequence without supervision. Clustering based on these cues enables us to learn the required appearance invariances for the tracking task at hand and train an AutoEncoder to generate suitable latent representations. Thus, the resulting latent representations can serve as robust appearance cues for tracking even over large temporal distances where no reliable spatio-temporal features can be extracted. We show that, despite being trained without using the provided annotations, our model provides competitive results on the challenging MOT Benchmark for pedestrian tracking.
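The abstract does not specify which spatio-temporal cues are used. As a minimal sketch, assuming the cue is bounding-box overlap (intersection over union) between detections in neighboring frames, pairs that likely show the same person could be collected without annotations as follows. The function names `iou` and `link_neighboring_frames`, the `(x1, y1, x2, y2)` box format, and the threshold of 0.5 are illustrative assumptions, not taken from the paper.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero if disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def link_neighboring_frames(dets_t, dets_t1, threshold=0.5):
    """Return index pairs (i, j) whose boxes overlap by at least `threshold`.

    dets_t and dets_t1 are lists of boxes from frame t and frame t+1.
    The threshold value is an assumption chosen for illustration.
    """
    return [
        (i, j)
        for i, box_a in enumerate(dets_t)
        for j, box_b in enumerate(dets_t1)
        if iou(box_a, box_b) >= threshold
    ]


if __name__ == "__main__":
    frame_t = [(0, 0, 10, 10), (20, 20, 30, 30)]
    frame_t1 = [(1, 0, 11, 10), (50, 50, 60, 60)]
    print(link_neighboring_frames(frame_t, frame_t1))
```

Pairs found this way could act as weak "same identity" labels for clustering and for training an appearance model, which is the role the abstract assigns to the spatio-temporal cues.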

Similar Papers

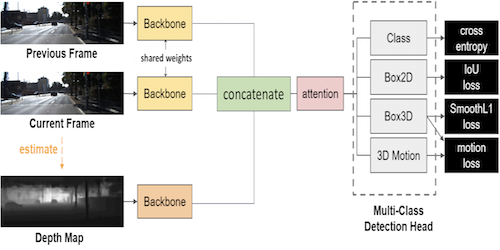

3D Object Detection from Consecutive Monocular Images

Chia-Chun Cheng (National Tsing Hua University)*, Shang-Hong Lai (Microsoft)

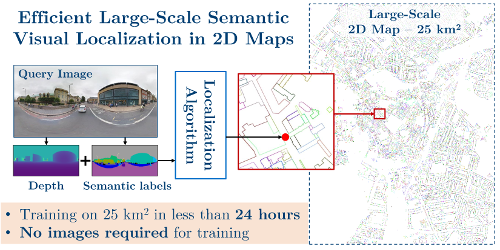

Efficient Large-Scale Semantic Visual Localization in 2D Maps

Tomas Vojir (CMP CTU)*, Ignas Budvytis (Department of Engineering, University of Cambridge), Roberto Cipolla (University of Cambridge)

Semantic Synthesis of Pedestrian Locomotion

Maria Priisalu (Lund University)*, Ciprian Paduraru (IMAR), Aleksis Pirinen (Lund University), Cristian Sminchisescu (Lund University)