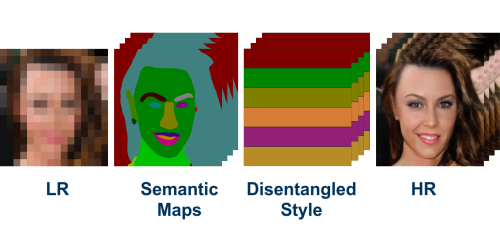

Multiple Exemplars-based Hallucination for Face Super-resolution and Editing

Kaili Wang (KU Leuven, UAntwerpen)*, Jose Oramas (UAntwerp, imec-IDLab), Tinne Tuytelaars (KU Leuven)

Keywords: Face, Pose, Action, and Gesture

Abstract:

Given a very low-resolution input image of a face (say 16×16 or 8×8 pixels), the goal of this paper is to reconstruct a high-resolution version thereof. This, by itself, is an ill-posed problem, as the high-frequency information is missing in the low-resolution input and needs to be hallucinated based on prior knowledge about the image content. Rather than relying on a generic face prior, in this paper we explore the use of a set of exemplars, i.e. other high-resolution images of the same person. These guide the neural network, as we condition the output on them. Multiple exemplars work better than a single one. To combine the information from multiple exemplars effectively, we introduce a pixel-wise weight generation module. Besides standard face super-resolution, our method enables subtle face editing simply by replacing the exemplars with another set with different facial features. A user study shows that the super-resolved images can hardly be distinguished from real images on the CelebA dataset. A qualitative comparison indicates that our model outperforms methods proposed in the literature on the CelebA and WebFace data.

Similar Papers

DeepSEE: Deep Disentangled Semantic Explorative Extreme Super-Resolution

Marcel C. Bühler (ETH Zürich)*, Andrés Romero (ETH Zürich), Radu Timofte (ETH Zürich)

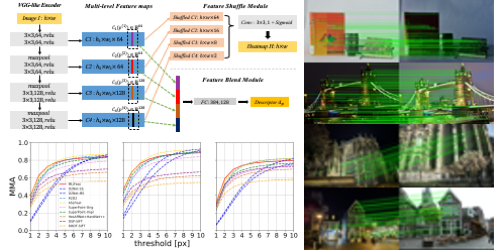

MLIFeat: Multi-level information fusion based deep local features

Yuyang Zhang (Institute of Automation, Chinese Academy of Sciences, University of Chinese Academy of Sciences), Jinge Wang (Megvii), Shibiao Xu (Institute of Automation, Chinese Academy of Sciences)*, Xiao Liu (Megvii Inc), Xiaopeng Zhang (Institute of Automation, Chinese Academy of Sciences)

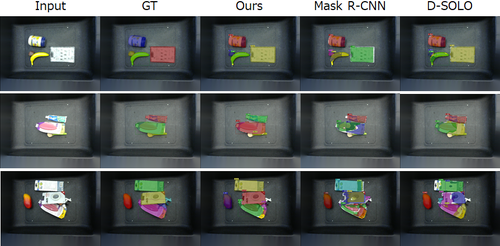

Point Proposal based Instance Segmentation with Rectangular Masks for Robot Picking Task

Satoshi Ito (Toshiba Corporation)*, Susumu Kubota (Toshiba Corporation)