Large-Scale Cross-Domain Few-Shot Learning

Jiechao Guan (Renmin University of China), Manli Zhang (Renmin University of China), Zhiwu Lu (Renmin University of China)*

Keywords: Big Data, Large Scale Methods

Abstract:

Learning classifiers for novel classes from a few training examples (shots) in a new domain is a practical problem setting. However, the two problems involved in this setting, few-shot learning (FSL) and domain adaptation (DA), have so far only been studied separately. In this paper, we tackle the problem of large-scale cross-domain few-shot learning for the first time. To overcome the dual challenges of few shots and the domain gap, we propose a novel Triplet Autoencoder (TriAE) model. The model learns a latent subspace in which both transfer learning from the source classes to the novel classes and domain alignment take place. We formulate an efficient model optimization algorithm and provide a rigorous theoretical analysis of it. Extensive experiments on two large-scale cross-domain datasets show that our TriAE model outperforms state-of-the-art FSL and domain adaptation models, as well as their naive combinations. Interestingly, under the conventional large-scale FSL setting, our TriAE model also outperforms existing FSL methods by significant margins, indicating that domain gaps are universally present.


Similar Papers

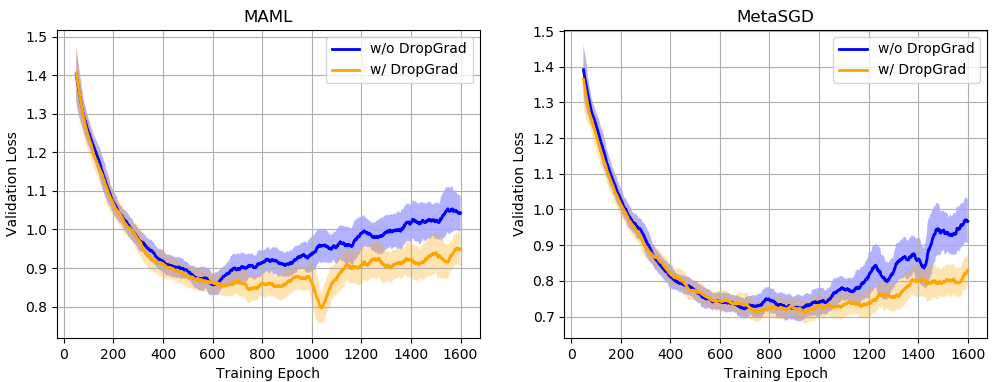

Regularizing Meta-Learning via Gradient Dropout

Hung-Yu Tseng (University of California, Merced)*, Yi-Wen Chen (University of California, Merced), Yi-Hsuan Tsai (NEC Labs America), Sifei Liu (NVIDIA), Yen-Yu Lin (National Chiao Tung University), Ming-Hsuan Yang (University of California at Merced)

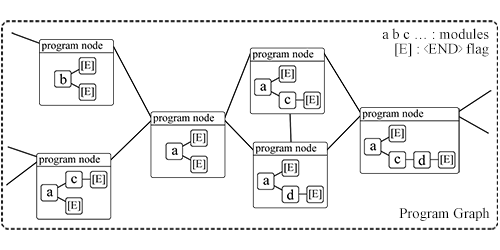

Graph-based Heuristic Search for Module Selection Procedure in Neural Module Network

Yuxuan Wu (The University of Tokyo)*, Hideki Nakayama (The University of Tokyo)

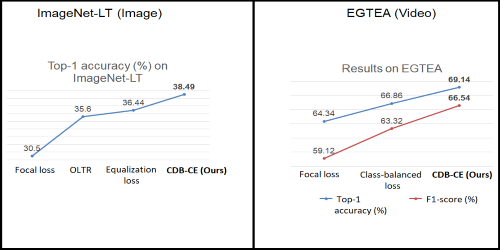

Class-Wise Difficulty-Balanced Loss for Solving Class-Imbalance

Saptarshi Sinha (Hitachi CRL)*, Hiroki Ohashi (Hitachi Ltd), Katsuyuki Nakamura (Hitachi Ltd.)