Class-Wise Difficulty-Balanced Loss for Solving Class-Imbalance

Saptarshi Sinha (Hitachi CRL)*, Hiroki Ohashi (Hitachi Ltd.), Katsuyuki Nakamura (Hitachi Ltd.)

Keywords: Datasets and Performance Analysis

Abstract:

Class-imbalance is one of the major challenges in real-world datasets, where a few classes (called majority classes) contain many more data samples than the rest (called minority classes). Training deep neural networks on such datasets leads to performance that is typically biased towards the majority classes. Most prior works try to solve class-imbalance by assigning more weight to the minority classes in various ways (e.g., data re-sampling, cost-sensitive learning). However, we argue that the amount of available training data may not always be a good clue for determining the weighting strategy, because some minority classes might be sufficiently represented even by a small number of training samples. Overweighting samples of such classes can lead to a drop in the model’s overall performance. We claim that the ‘difficulty’ of a class as perceived by the model is more important for determining the weighting. In this light, we propose a novel loss function named Class-wise Difficulty-Balanced loss, or CDB loss, which dynamically distributes weights to each sample according to the difficulty of the class that the sample belongs to. Note that the assigned weights change dynamically, as the ‘difficulty’ for the model may change as learning progresses. Extensive experiments are conducted on both image (artificially induced class-imbalanced MNIST, long-tailed CIFAR and ImageNet-LT) and video (EGTEA) datasets. The results show that CDB loss consistently outperforms recently proposed loss functions on class-imbalanced datasets irrespective of the data type (i.e., video or image).
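
As a rough illustration of the idea of weighting each sample by the difficulty of its class, the following is a minimal sketch and not the authors' implementation. The abstract does not say how difficulty is measured; the sketch assumes it is one minus the per-class accuracy on held-out data, raised to a hypothetical exponent `tau`. The function names `class_difficulty_weights` and `weighted_cross_entropy` are likewise made up for this example.

```python
import numpy as np


def class_difficulty_weights(class_accuracy, tau=1.0):
    """Map per-class accuracy to per-class weights.

    Assumption (not stated in the abstract): difficulty = 1 - accuracy,
    and weight = difficulty ** tau, where tau is a hypothetical knob.
    """
    acc = np.asarray(class_accuracy, dtype=float)
    difficulty = 1.0 - acc
    return difficulty ** tau


def weighted_cross_entropy(logits, labels, class_weights):
    """Mean cross-entropy where each sample is scaled by its class weight."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    class_weights = np.asarray(class_weights, dtype=float)
    # Numerically stable log-softmax over the class axis.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Negative log-likelihood of the true class for each sample.
    nll = -log_probs[np.arange(len(labels)), labels]
    return float(np.mean(class_weights[labels] * nll))


# Recompute the weights whenever per-class accuracy is re-measured
# (e.g. once per epoch), so they follow the model's current difficulty.
weights = class_difficulty_weights([0.95, 0.60, 0.90], tau=1.0)
loss = weighted_cross_entropy(np.zeros((2, 3)), [0, 1], weights)
```

In this sketch a class the model already classifies well contributes little to the loss regardless of how few samples it has, while a poorly classified class is emphasized, which mirrors the argument that difficulty rather than sample count should drive the weighting.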

Similar Papers

Imbalance Robust Softmax for Deep Embedding Learning

Hao Zhu (Australian National University)*, Yang Yuan (AnyVision), Guosheng Hu (AnyVision), Xiang Wu (Reconova), Neil Robertson (Queen's University Belfast)

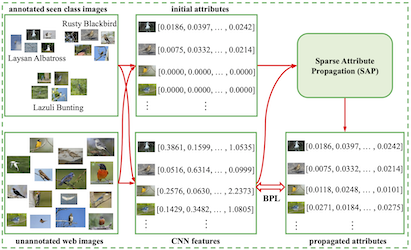

Few-Shot Zero-Shot Learning: Knowledge Transfer with Less Supervision

Nanyi Fei (Renmin University of China), Jiechao Guan (Renmin University of China), Zhiwu Lu (Renmin University of China)*, Yizhao Gao (Renmin University of China)

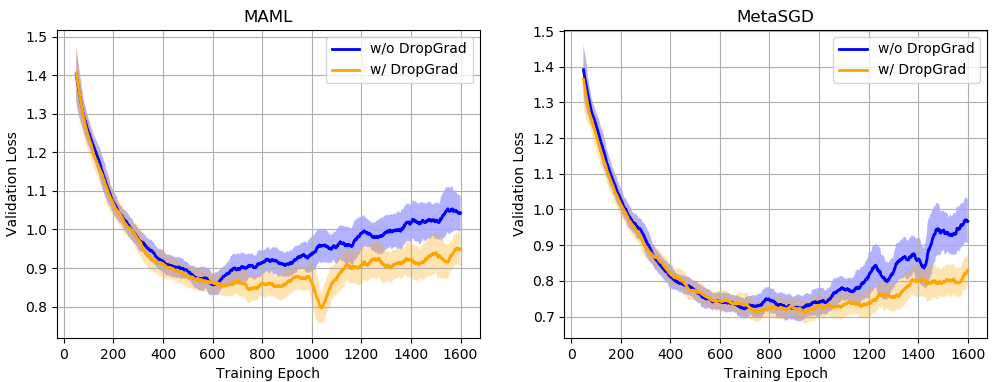

Regularizing Meta-Learning via Gradient Dropout

Hung-Yu Tseng (University of California, Merced)*, Yi-Wen Chen (University of California, Merced), Yi-Hsuan Tsai (NEC Labs America), Sifei Liu (NVIDIA), Yen-Yu Lin (National Chiao Tung University), Ming-Hsuan Yang (University of California at Merced)