Few-Shot Zero-Shot Learning: Knowledge Transfer with Less Supervision

Nanyi Fei (Renmin University of China), Jiechao Guan (Renmin University of China), Zhiwu Lu (Renmin University of China)*, Yizhao Gao (Renmin University of China)

Keywords: Statistical Methods and Learning

Abstract:

Existing zero-shot learning (ZSL) methods assume that there exist sufficient training samples from seen classes, each annotated with semantic descriptors such as attributes, for knowledge transfer to unseen classes that have no training samples. However, this assumption is often invalid because collecting sufficient seen-class samples can be difficult and attribute annotation is expensive; it thus severely limits the scalability of ZSL. In this paper, we define a new setting termed Few-Shot Zero-Shot Learning (FSZSL), where only a few annotated images are collected from each seen class (i.e., few-shot). This is clearly more challenging yet more realistic than the conventional ZSL setting. To overcome the resultant image-level attribute sparsity, we propose a novel inductive ZSL model termed sparse attribute propagation (SAP), which propagates attribute annotations to more unannotated images using sparse coding. This is followed by learning bidirectional projections between features and attributes for ZSL. An efficient solver is provided for such knowledge transfer with less supervision, together with rigorous theoretical analysis. With our SAP, we show that a ZSL training dataset can also be augmented by the abundant web images returned by image search engines, to further improve the model performance. Extensive experiments show that the proposed model achieves state-of-the-art results.
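
The abstract does not give the SAP formulation, so the following is only a minimal sketch of the general idea of propagating attributes through sparse coding, not the authors' model or solver. It assumes that an unannotated image's feature vector is approximated as a sparse combination of the few annotated feature vectors (a lasso problem solved here with plain ISTA), and that the same coefficients are reused to combine the annotated attribute vectors. All function names, the `lam` parameter, and the toy data are hypothetical.

```python
def soft_threshold(v, t):
    """Proximal operator of t * |v|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def sparse_code(x, atoms, lam=0.05, n_iter=500):
    """ISTA for min_a 0.5 * ||x - sum_j a[j] * atoms[j]||^2 + lam * ||a||_1."""
    n, dim = len(atoms), len(x)
    # The squared Frobenius norm upper-bounds the gradient's Lipschitz
    # constant, so 1 / lipschitz is a safe (conservative) step size.
    lipschitz = sum(v * v for atom in atoms for v in atom) or 1.0
    a = [0.0] * n
    for _ in range(n_iter):
        # Residual of the current reconstruction of x.
        resid = [sum(a[j] * atoms[j][d] for j in range(n)) - x[d]
                 for d in range(dim)]
        # Gradient of the squared-error term with respect to each coefficient.
        grad = [sum(atoms[j][d] * resid[d] for d in range(dim))
                for j in range(n)]
        # Gradient step followed by soft-thresholding (the L1 proximal step).
        a = [soft_threshold(a[j] - grad[j] / lipschitz, lam / lipschitz)
             for j in range(n)]
    return a


def propagate_attributes(x, annotated_feats, annotated_attrs, lam=0.05):
    """Estimate attributes for x by reusing its sparse code (assumed scheme)."""
    a = sparse_code(x, annotated_feats, lam)
    n_attr = len(annotated_attrs[0])
    return [sum(a[j] * annotated_attrs[j][k] for j in range(len(a)))
            for k in range(n_attr)]


# Toy data (hypothetical): two annotated images, one unannotated image.
annotated_feats = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
annotated_attrs = [[1.0, 0.0], [0.0, 1.0]]
unannotated = [0.9, 0.0, 0.0]

est = propagate_attributes(unannotated, annotated_feats, annotated_attrs)
print(est)
```

In this toy case the unannotated feature lies close to the first annotated image, so the estimated attribute vector is dominated by that image's attributes and the second coefficient stays exactly zero. The bidirectional feature-attribute projections mentioned in the abstract are a separate step and are not sketched here.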

Similar Papers

Imbalance Robust Softmax for Deep Embedding Learning

Hao Zhu (Australian National University)*, Yang Yuan (AnyVision), Guosheng Hu (AnyVision), Xiang Wu (Reconova), Neil Robertson (Queen's University Belfast)

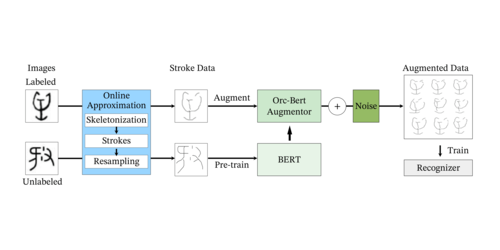

Self-supervised Learning of Orc-Bert Augmentator for Recognizing Few-Shot Oracle Characters

Wenhui Han (Fudan University), Xinlin Ren (Fudan University), Hangyu Lin (Fudan University), Yanwei Fu (Fudan University)*, Xiangyang Xue (Fudan University)

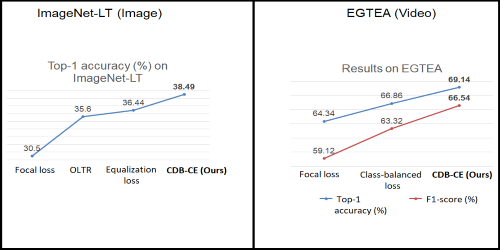

Class-Wise Difficulty-Balanced Loss for Solving Class-Imbalance

Saptarshi Sinha (Hitachi CRL)*, Hiroki Ohashi (Hitachi Ltd.), Katsuyuki Nakamura (Hitachi Ltd.)