FreezeNet: Full Performance by Reduced Storage Costs

Paul Wimmer (Luebeck University / Robert Bosch GmbH)*, Jens Mehnert (Robert Bosch GmbH), Alexandru Condurache (Bosch)

Keywords: Datasets and Performance Analysis; Optimization Methods; Statistical Methods and Learning

Abstract:

Pruning generates sparse networks by setting parameters to zero. In this work we improve one-shot pruning methods, applied before training, without adding any storage cost while preserving the sparse gradient computations. The main difference from pruning is that we do not sparsify the network's weights but learn just a few key parameters and keep the other ones fixed at their randomly initialized values. This mechanism is called freezing the parameters. Those frozen weights can be stored efficiently with a single 32-bit random seed. The parameters to be frozen are determined one-shot by a single forward and backward pass applied before training starts. We call the introduced method FreezeNet. In our experiments we show that FreezeNets achieve good results, especially for extreme freezing rates. Freezing weights preserves the gradient flow throughout the network; consequently, FreezeNets train better and have an increased capacity compared to their pruned counterparts. On the classification tasks MNIST and CIFAR-10/100 we outperform SNIP, in this setting the best reported one-shot pruning method applied before training. On MNIST, FreezeNet achieves 99.2% of the performance of the baseline LeNet-5-Caffe architecture, while compressing the number of trained and stored parameters by a factor of �_57.
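The freezing mechanism described above can be illustrated with a minimal pure-Python sketch. It assumes a toy linear model with squared-error loss and a SNIP-style saliency |w_i · g_i| to choose which parameters stay trainable after a single forward and backward pass; the abstract does not state the exact criterion, and all function names (`init_weights`, `freeze_mask`, `sgd_step`, `reconstruct`) are hypothetical, not taken from the authors' code. The point it shows is that frozen weights keep their random initial values (rather than being zeroed as in pruning) and can be regenerated from the seed alone.

```python
import random


def init_weights(seed, n):
    # Frozen weights are regenerated from a single integer seed,
    # so only the seed needs to be stored for them.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def mse(w, xs, ts):
    # Toy model y = w . x with loss 0.5 * (y - t)^2, averaged over the batch.
    total = 0.0
    for x, t in zip(xs, ts):
        y = sum(wi * xi for wi, xi in zip(w, x))
        total += 0.5 * (y - t) ** 2
    return total / len(xs)


def linear_grad(w, xs, ts):
    # One forward and backward pass: gradient of mse() w.r.t. every weight.
    g = [0.0] * len(w)
    for x, t in zip(xs, ts):
        err = sum(wi * xi for wi, xi in zip(w, x)) - t
        for i, xi in enumerate(x):
            g[i] += err * xi / len(xs)
    return g


def freeze_mask(w, g, keep_ratio):
    # Assumed SNIP-style saliency |w_i * g_i|: the top-k parameters stay
    # trainable (True), all others are frozen at their initial value (False).
    k = max(1, int(round(keep_ratio * len(w))))
    scores = [abs(wi * gi) for wi, gi in zip(w, g)]
    top = sorted(range(len(w)), key=lambda i: scores[i], reverse=True)[:k]
    mask = [False] * len(w)
    for i in top:
        mask[i] = True
    return mask


def sgd_step(w, g, mask, lr):
    # Only trainable entries are updated; frozen entries keep their random
    # initial value instead of being set to zero as pruning would do.
    return [wi - lr * gi if m else wi for wi, gi, m in zip(w, g, mask)]


def reconstruct(seed, n, trained):
    # Rebuild the full weight vector from the seed plus the stored
    # {index: value} pairs of the trained parameters.
    w = init_weights(seed, n)
    for i, v in trained.items():
        w[i] = v
    return w


if __name__ == "__main__":
    seed, n = 1234, 20
    data_rng = random.Random(0)
    xs = [[data_rng.uniform(-1, 1) for _ in range(n)] for _ in range(8)]
    ts = [data_rng.uniform(-1, 1) for _ in range(8)]

    w0 = init_weights(seed, n)
    mask = freeze_mask(w0, linear_grad(w0, xs, ts), keep_ratio=0.1)
    w = w0
    for _ in range(50):
        w = sgd_step(w, linear_grad(w, xs, ts), mask, lr=0.1)

    trained = {i: w[i] for i in range(n) if mask[i]}
    print("trainable:", sum(mask), "of", n)
    print("loss before/after:", mse(w0, xs, ts), mse(w, xs, ts))
    print("rebuilt from seed:", reconstruct(seed, n, trained) == w)
```

In this sketch, storing the model needs only the seed plus the values and positions of the trained entries; the frozen majority costs nothing beyond the seed, which is the storage argument the abstract makes.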

Similar Papers

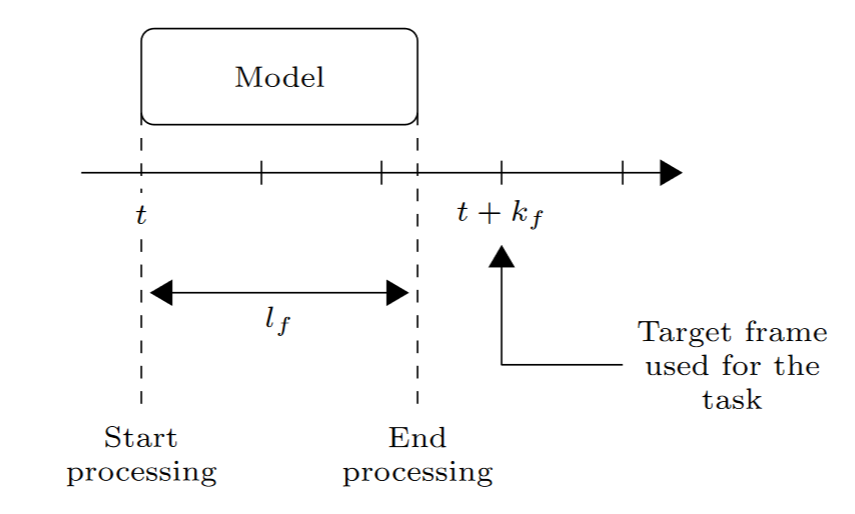

Real-Time Segmentation Networks should be Latency Aware

Evann Courdier (Idiap Research Institute)*, François Fleuret (University of Geneva)

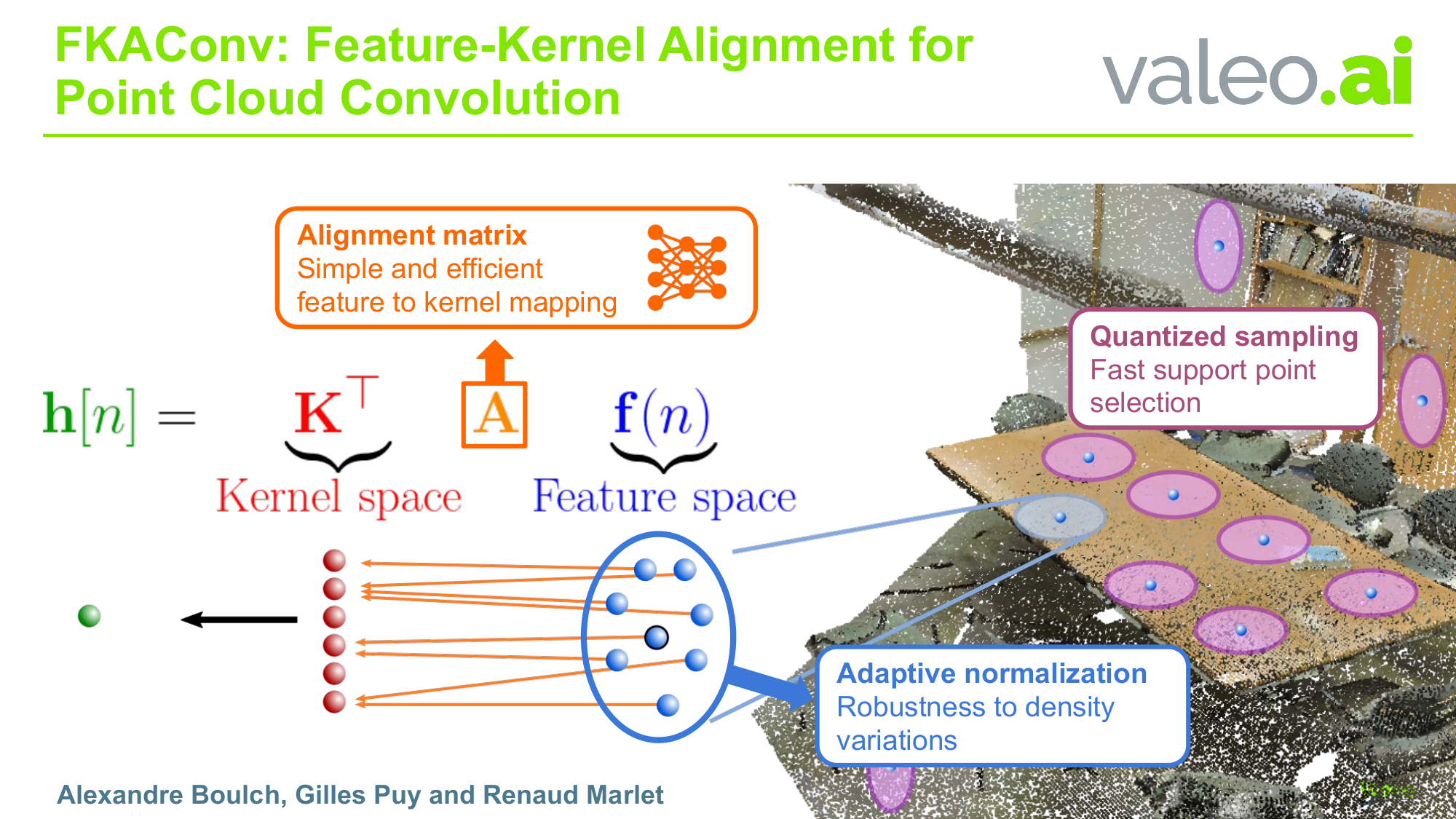

FKAConv: Feature-Kernel Alignment for Point Cloud Convolution

Alexandre Boulch (valeo.ai)*, Gilles Puy (Valeo), Renaud Marlet (Ecole des Ponts ParisTech)

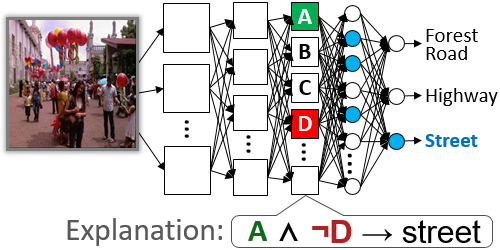

ERIC: Extracting Relations Inferred from Convolutions

Joe Townsend (Fujitsu Laboratories of Europe LTD)*, Theodoros Kasioumis (Fujitsu Laboratories of Europe LTD), Hiroya Inakoshi (Fujitsu Laboratories of Europe)