Weakly-supervised Reconstruction of 3D Objects with Large Shape Variation from Single In-the-Wild Images

Shichen Sun (Sichuan University), Zhengbang Zhu (Sichuan University), Xiaowei Dai (Sichuan University), Qijun Zhao (Sichuan University)*, Jing Li (Sichuan University)

Keywords: 3D Computer Vision

Abstract:

Existing unsupervised 3D object reconstruction methods do not work well when the shape of the objects varies substantially across images or when the images contain distracting backgrounds. This paper proposes a novel learning framework for reconstructing 3D objects with large shape variation from single in-the-wild images. Because shape variation changes the appearance of objects at various scales, we propose a fusion module that combines multi-scale image features for 3D reconstruction. To deal with the ambiguity caused by shape variation, we propose a side-output mask constraint to supervise the feature extraction, and an adaptive edge constraint and an initial shape constraint to supervise the shape reconstruction. Moreover, we propose background manipulation to augment the training images so that the resulting model is robust to background distraction. Extensive experiments on both non-rigid objects (birds) and rigid objects (planes and vehicles) demonstrate the superiority of the proposed method.
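The abstract does not specify how background manipulation is carried out. As a minimal sketch of one plausible reading, assuming an object mask is available for each training image, the object can be composited onto a different background. The function name `replace_background`, the array shapes, and the use of NumPy are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def replace_background(image, mask, background):
    """Composite the masked object from `image` onto `background`.

    Hypothetical helper for illustration only.
    image, background: float arrays of shape (H, W, 3).
    mask: array of shape (H, W), 1 on the object and 0 elsewhere
          (soft values in [0, 1] blend the two images).
    """
    if image.shape != background.shape:
        raise ValueError("image and background must have the same shape")
    if mask.shape != image.shape[:2]:
        raise ValueError("mask must match the image height and width")
    # Add a channel axis so the mask broadcasts over the RGB channels.
    alpha = mask.astype(image.dtype)[..., None]
    return alpha * image + (1.0 - alpha) * background


# Toy example: a 4x4 image whose central 2x2 block is the "object".
rng = np.random.default_rng(0)
image = rng.random((4, 4, 3))
background = np.zeros((4, 4, 3))
mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1.0
augmented = replace_background(image, mask, background)
```

In this toy example the object pixels are kept unchanged and every other pixel takes the value of the new background, which is the property an augmentation of this kind would rely on.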

Similar Papers

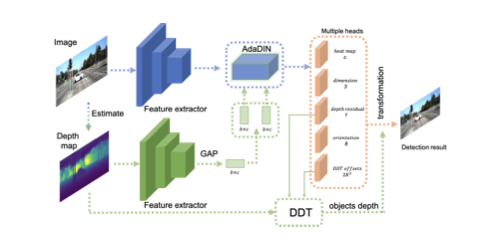

Dynamic Depth Fusion and Transformation for Monocular 3D Object Detection

Erli Ouyang (Fudan University)*, Li Zhang (University of Oxford), Mohan Chen (Fudan University), Anurag Arnab (University of Oxford), Yanwei Fu (Fudan University)

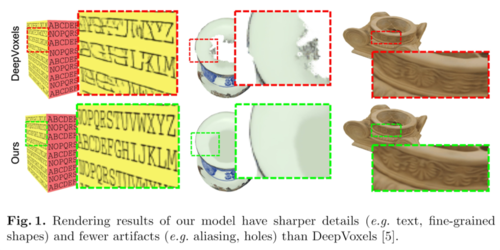

DeepVoxels++: Enhancing the Fidelity of Novel View Synthesis from 3D Voxel Embeddings

Tong He (UCLA)*, John Collomosse (Adobe Research), Hailin Jin (Adobe Research), Stefano Soatto (UCLA)

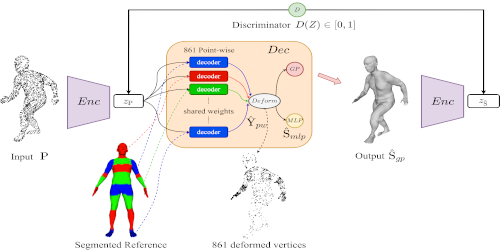

Reconstructing Human Body Mesh from Point Clouds by Adversarial GP Network

Boyao Zhou (Inria)*, Jean-Sebastien Franco (INRIA), Federica Bogo (Microsoft), Bugra Tekin (Microsoft), Edmond Boyer (Inria)