Homography-based Egomotion Estimation Using Gravity and SIFT Features

Yaqing Ding (Nanjing University of Science and Technology)*, Daniel Barath (MTA SZTAKI, CMP Prague), Zuzana Kukelova (Czech Technical University in Prague)

Keywords: 3D Computer Vision

Abstract:

Camera systems used, e.g., in cars, UAVs, smartphones, and tablets, are typically equipped with IMUs (inertial measurement units) that can measure the gravity vector. Using the information from an IMU, the y-axes of the cameras can be aligned with the gravity direction, reducing their relative orientation to a single DOF (degree of freedom). In this paper, we use the gravity information to derive extremely efficient minimal solvers for homography-based egomotion estimation from orientation- and scale-covariant features. We exploit the fact that orientation- and scale-covariant features, such as SIFT or ORB, provide additional constraints on the homography. Based on prior knowledge about the target plane (horizontal, vertical, or general with respect to the gravity direction) and using the SIFT/ORB constraints, we derive new minimal solvers that require fewer correspondences than traditional approaches and thus significantly speed up the robust estimation procedure. The proposed solvers are compared with state-of-the-art point-based solvers on both synthetic data and real images, showing comparable accuracy and a significant improvement in speed. The implementation of our solvers is available at https://github.com/yaqding/relativepose-sift-gravity.
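To illustrate why requiring fewer correspondences speeds up robust estimation, the following minimal sketch evaluates the standard RANSAC iteration bound for different minimal sample sizes. It is not taken from the authors' implementation: the function name `ransac_iterations`, the 50% inlier ratio, the 99% confidence, and the sample sizes 2 and 1 are illustrative assumptions; only the sample size of 4 for a general point-based homography is a standard fact.

```python
import math


def ransac_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Standard RANSAC bound: the number of random samples needed so that,
    with probability `confidence`, at least one sample is all-inlier.

    Hypothetical helper for illustration; not part of the authors' code.
    """
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    # Probability that one random minimal sample contains only inliers.
    p_all_inliers = inlier_ratio ** sample_size
    if p_all_inliers >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_all_inliers))


if __name__ == "__main__":
    # Sample size 4: general point-based homography.
    # Sample sizes 2 and 1: assumed here purely to show the trend.
    for sample_size in (4, 2, 1):
        n = ransac_iterations(inlier_ratio=0.5, sample_size=sample_size)
        print(f"sample size {sample_size}: {n} iterations")
```

Because the all-inlier probability decays exponentially with the sample size, each correspondence removed from the minimal sample lowers the required iteration count considerably, and the effect grows as the inlier ratio drops.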

Similar Papers

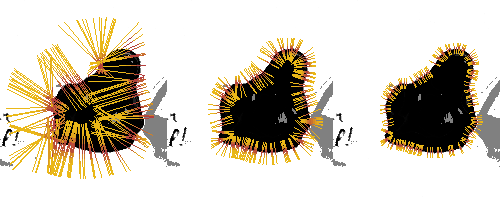

A Sparse Gaussian Approach to Region-Based 6DoF Object Tracking

Manuel Stoiber (German Aerospace Center (DLR))*, Martin Pfanne (German Aerospace Center), Klaus H. Strobl (DLR), Rudolph Triebel (German Aerospace Center (DLR)), Alin Albu-Schaeffer (Robotics and Mechatronics Center (RMC), German Aerospace Center (DLR))

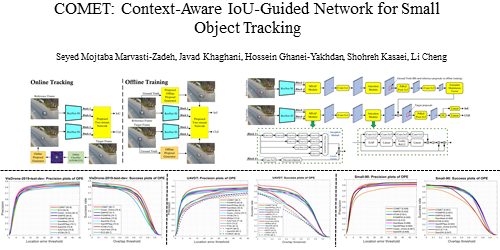

COMET: Context-Aware IoU-Guided Network for Small Object Tracking

Seyed Mojtaba Marvasti-Zadeh (University of Alberta)*, Javad Khaghani (University of Alberta), Hossein Ghanei-Yakhdan (Yazd University), Shohreh Kasaei (Sharif University of Technology), Li Cheng (ECE dept., University of Alberta)

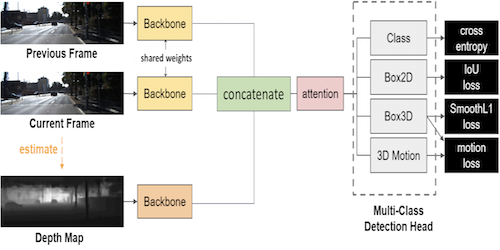

3D Object Detection from Consecutive Monocular Images

Chia-Chun Cheng (National Tsing Hua University)*, Shang-Hong Lai (Microsoft)