Towards Fast and Robust Adversarial Training for Image Classification

Erh-Chung Chen (National Tsing Hua University)*, Che-Rung Lee (National Tsing Hua University)

Keywords: Statistical Methods and Learning

Abstract:

Adversarial training, which augments the training data with adversarial examples, is one of the most effective defenses against adversarial attacks. However, its robustness degrades for complex models, and generating strong adversarial examples is time-consuming. In this paper, we propose methods to improve both the robustness and the efficiency of adversarial training. First, we use a reconstructor to force the classifier to learn the important features under perturbations. Second, we employ an enhanced FGSM (Fast Gradient Sign Method) to generate adversarial examples effectively; it can also detect overfitting and stop training early at no extra cost. Experiments on MNIST and CIFAR-10 validate the effectiveness of our methods. We also compare our algorithm with state-of-the-art defense methods. The results show that our algorithm is 4 to 5 times faster than the fastest previous training method. On CIFAR-10, our method achieves over 46% robust accuracy, which is better than most other methods.
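For reference, the sketch below shows plain single-step FGSM adversarial training, the baseline this work builds on, using a toy binary logistic-regression model in pure Python. It is not the authors' enhanced FGSM, reconstructor, or overfitting detector, whose details are not given here. The model, the toy data `TOY_DATA`, the perturbation budget `eps`, the learning rate, and every function name are hypothetical choices made only for illustration. FGSM perturbs an input as x_adv = clip(x + eps * sign(grad_x L), 0, 1), and training then updates the model on x_adv instead of x.

```python
import math

# Hypothetical toy data: 2-D "images" with pixel values in [0, 1], labels in {-1, +1}.
TOY_DATA = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.9, 0.9], 1),
    ([0.1, 0.2], -1), ([0.2, 0.1], -1), ([0.1, 0.1], -1),
]


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def sign(v):
    """Return -1.0, 0.0, or +1.0."""
    return float((v > 0) - (v < 0))


def score(w, b, x):
    """Linear score z = w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def logistic_loss(w, b, x, y):
    """Loss log(1 + exp(-y * z)), computed stably."""
    m = y * score(w, b, x)
    if m > 0:
        return math.log1p(math.exp(-m))
    return -m + math.log1p(math.exp(m))


def fgsm(w, b, x, y, eps):
    """Single-step FGSM: x_adv = clip(x + eps * sign(dL/dx), 0, 1)."""
    # For this model dL/dx_i = -y * sigmoid(-y * z) * w_i.
    coef = -y * sigmoid(-y * score(w, b, x))
    x_adv = [xi + eps * sign(coef * wi) for xi, wi in zip(x, w)]
    # Keep pixels in the valid [0, 1] range.
    return [min(1.0, max(0.0, xi)) for xi in x_adv]


def adversarial_train(data, eps, lr=0.5, epochs=200):
    """Plain FGSM adversarial training with per-example SGD on attacked inputs."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # Attack the current model, then take a gradient step on the attacked input.
            x_adv = fgsm(w, b, x, y, eps)
            g = -y * sigmoid(-y * score(w, b, x_adv))  # dL/dz at x_adv
            w = [wi - lr * g * xi for wi, xi in zip(w, x_adv)]
            b -= lr * g
    return w, b


def robust_accuracy(w, b, data, eps):
    """Fraction of examples still classified correctly after an FGSM attack."""
    correct = 0
    for x, y in data:
        z = score(w, b, fgsm(w, b, x, y, eps))
        correct += int((z > 0) == (y > 0))
    return correct / len(data)


if __name__ == "__main__":
    w, b = adversarial_train(TOY_DATA, eps=0.1)
    print("weights:", w, "bias:", b)
    print("robust accuracy at eps=0.1:", robust_accuracy(w, b, TOY_DATA, eps=0.1))
```

Because this toy model is linear, the clipped FGSM step is the exact worst-case L-infinity perturbation, so `robust_accuracy` is exact here. For deep image classifiers a single FGSM step only approximates the strongest attack, which is why generating strong adversarial examples (for example, with many attack iterations) makes adversarial training expensive.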


Similar Papers

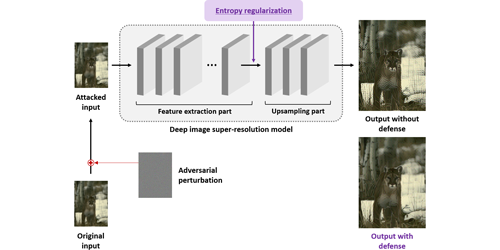

Adversarially Robust Deep Image Super-Resolution using Entropy Regularization

Jun-Ho Choi (Yonsei University), Huan Zhang (UCLA), Jun-Hyuk Kim (Yonsei University), Cho-Jui Hsieh (UCLA), Jong-Seok Lee (Yonsei University, Korea)*

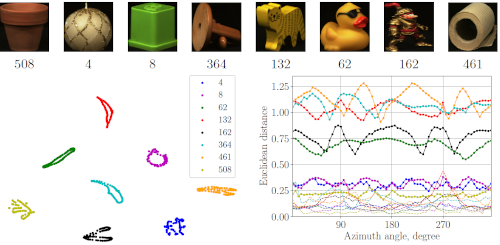

Learning Local Feature Descriptors for Multiple Object Tracking

Dmytro Borysenko (Samsung R&D Institute Ukraine), Dmytro Mykheievskyi (Samsung R&D Institute Ukraine), Viktor Porokhonskyy (Samsung Research&Development Institute Ukraine (SRK))*

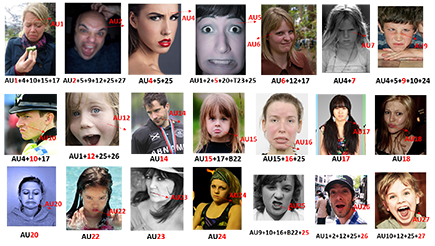

RAF-AU Database: In-the-Wild Facial Expressions with Subjective Emotion Judgement and Objective AU Annotations

Wen-Jing Yan (JD Digits)*, Shan Li (Beijing University of Posts and Telecommunications), Chengtao Que (JD Digits), Jiquan Pei (JD Digits), Weihong Deng (Beijing University of Posts and Telecommunications)