Dense-Scale Feature Learning in Person Re-Identification

Li Wang (Inspur), Baoyu Fan (Inspur Electronic Information Industry Co.,Ltd.)*, Zhenhua Guo (Inspur Electronic Information Industry Co.,Ltd.), Yaqian Zhao (Inspur), Runze Zhang (Inspur Electronic Information Industry Co.,Ltd.), Rengang Li (Inspur), Weifeng Gong (Inspur Electronic Information Industry Co.,Ltd.)

Keywords: Applications of Computer Vision, Vision for X

Abstract:

For re-identification (Re-ID) of massive numbers of pedestrians, models must be able to represent extremely complex and diverse multi-scale features. However, existing models learn only a limited set of multi-scale features in a multi-branch manner. Directly increasing the number of scale branches to cover more scales confuses discrimination and degrades performance, because for a given input image only a few scale features are critical. To provide vast scale representation for person Re-ID while resolving the contradiction that excessive scales degrade performance, we propose a novel Dense-Scale Feature Learning Network (DSLNet). It consists of two core components: a Dense Connection Group (DCG), which provides abundant scale features, and a Channel-Wise Scale Selection (CSS) module, which dynamically selects the most discriminative scale features for each input image. DCG is composed of a densely connected convolutional stream. The receptive field gradually increases as the feature flows along the convolutional stream, and dense shortcut connections provide many more fused multi-scale features than existing methods. CSS is a novel attention module that, unlike any existing model, calculates attention along the branch direction. By enhancing or suppressing specific scale branches, it realizes truly channel-wise multi-scale selection. To the best of our knowledge, DSLNet is the most lightweight model and achieves state-of-the-art performance among lightweight models on four commonly used Re-ID datasets, surpassing most large-scale models.
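The two components described above can be illustrated with a small NumPy sketch. This is not the authors' implementation. The abstract does not specify layer counts, kernel sizes, whether dense shortcuts sum or concatenate features, or the internal layers of the attention module, so the sketch makes the following assumptions:

- DCG uses stride-1 3x3 convolutions whose shortcuts are summed.
- CSS uses a squeeze-and-excitation style path, similar to Selective Kernel attention, that applies a softmax across the branch axis separately for each channel.

All function and parameter names (`conv3x3_same`, `dense_connection_group`, `channel_wise_scale_selection`, `w1`, `w2`) are hypothetical. With stride-1 3x3 convolutions, the output of the k-th layer in the stream has a maximum receptive field of 2k + 1 pixels, while the dense shortcuts also mix in shallower paths.

```python
import numpy as np

def conv3x3_same(x, w):
    """Naive 3x3 'same' cross-correlation.
    x: (C_in, H, W); w: (C_out, C_in, 3, 3). Returns (C_out, H, W)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps H, W
    out = np.zeros((w.shape[0], h, wd))
    for di in range(3):
        for dj in range(3):
            patch = xp[:, di:di + h, dj:dj + wd]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, di, dj], patch)
    return out

def dense_connection_group(x, weights):
    """Hypothetical DCG sketch: each layer receives the SUM (assumed) of the
    input and all earlier layer outputs; every layer output is one scale branch.
    Layer k's deepest path stacks k 3x3 convs, so its max receptive field is 2k+1."""
    features = [x]
    for w in weights:
        inp = np.sum(features, axis=0)                # dense shortcut connections
        out = np.maximum(conv3x3_same(inp, w), 0.0)   # conv + ReLU
        features.append(out)
    return features[1:]                               # one branch per layer

def channel_wise_scale_selection(branches, w1, w2):
    """Hypothetical CSS sketch: attention computed along the branch direction.
    branches: list of B arrays (C, H, W); w1: (C_r, C); w2: (B*C, C_r)."""
    stacked = np.stack(branches)                      # (B, C, H, W)
    b, c = stacked.shape[:2]
    s = stacked.sum(axis=0).mean(axis=(1, 2))         # fuse branches + global avg pool -> (C,)
    z = np.maximum(w1 @ s, 0.0)                       # bottleneck FC + ReLU -> (C_r,)
    logits = (w2 @ z).reshape(b, c)                   # one logit per (branch, channel)
    logits -= logits.max(axis=0, keepdims=True)       # numerical stability
    attn = np.exp(logits)
    attn /= attn.sum(axis=0, keepdims=True)           # softmax over the branch axis
    out = (attn[:, :, None, None] * stacked).sum(axis=0)  # weighted branch sum, (C, H, W)
    return out, attn

# Usage with random (illustrative) weights.
rng = np.random.default_rng(0)
C, H, W, B = 8, 16, 8, 4
x = rng.standard_normal((C, H, W))
dcg_w = [rng.standard_normal((C, C, 3, 3)) * 0.1 for _ in range(B)]
branches = dense_connection_group(x, dcg_w)
w1 = rng.standard_normal((C // 2, C)) * 0.1
w2 = rng.standard_normal((B * C, C // 2)) * 0.1
y, attn = channel_wise_scale_selection(branches, w1, w2)
print(y.shape, attn.shape)  # (8, 16, 8) (4, 8)
```

The softmax runs over the branch axis independently for each channel, so each channel can favor a different scale. This is one plausible reading of "channel-wise scale selection" rather than a confirmed detail of DSLNet.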

Similar Papers

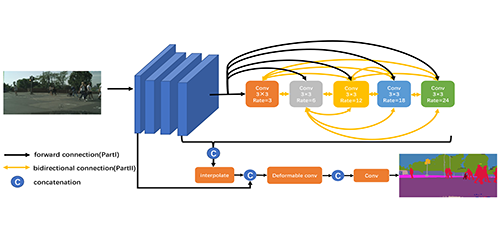

Learning More Accurate Features for Semantic Segmentation in CycleNet

Linzi Qu (Xidian University)*, Lihuo He (Xidian University), JunJie Ke (Xidian University), Xinbo Gao (Xidian University), Wen Lu (Xidian University)

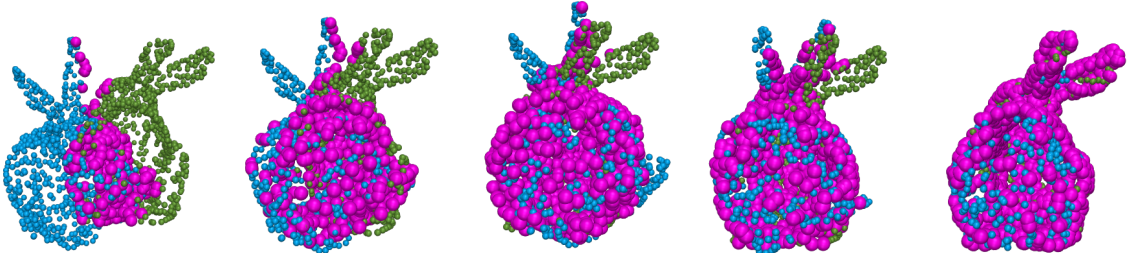

Best Buddies Registration for Point Clouds

Amnon Drory (Tel-Aviv University)*, Tal Shomer (Tel-Aviv University), Shai Avidan (Tel Aviv University), Raja Giryes (Tel Aviv University)

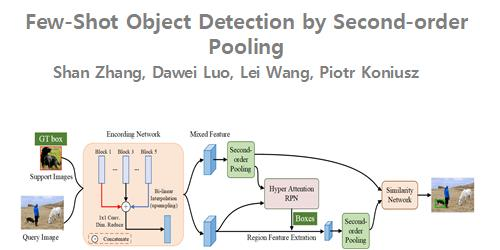

Few-Shot Object Detection by Second-order Pooling

Shan Zhang (ANU, Beijing Union University)*, Dawei Luo (Beijing Key Laboratory of Information Service Engineering, Beijing Union University ), Lei Wang ("University of Wollongong, Australia"), Piotr Koniusz (Data61/CSIRO, ANU)