Multi-View Consistency Loss for Improved Single-Image 3D Reconstruction of Clothed People

Akin Caliskan (Centre for Vision, Speech and Signal Processing, University of Surrey)*, Armin Mustafa (University of Surrey), Evren Imre (Vicon), Adrian Hilton (University of Surrey)

Keywords: 3D Computer Vision

Abstract:

We present a novel method to improve the accuracy of 3D reconstruction of clothed human shape from a single image. Recent work has introduced volumetric, implicit and model-based shape learning frameworks for reconstruction of objects and people from one or more images. However, the accuracy and completeness of reconstructions of clothed people are limited by the large variation in shape resulting from clothing, hair, body size, pose and camera viewpoint. This paper introduces two advances to overcome this limitation: firstly, a new synthetic dataset of realistic clothed people, 3DVH; and secondly, a novel multiple-view loss function for training monocular volumetric shape estimation, which is demonstrated to significantly improve generalisation and reconstruction accuracy. The 3DVH dataset of realistic clothed 3D human models rendered with diverse natural backgrounds is demonstrated to allow transfer to reconstruction from real images of people. Comprehensive comparative performance evaluation on both synthetic and real images of people demonstrates that the proposed method significantly outperforms previous state-of-the-art learning-based approaches to single-image 3D human shape estimation in reconstruction accuracy, completeness and quality. An ablation study shows that this improvement is due to both the proposed multiple-view training and the new 3DVH dataset. The code and the dataset can be found at the project website: https://akincaliskan3d.github.io/MV3DH/.
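
The abstract names a multiple-view loss for volumetric shape estimation but does not give its form. As a rough illustration only, the sketch below shows one way such a loss could be assembled: a per-view reconstruction term plus a term that penalises disagreement between views. Everything here is an assumption rather than the authors' formulation: the function names `voxel_bce` and `multi_view_loss`, the `weight` parameter, the use of binary cross-entropy and squared difference, and the premise that each view's predicted occupancy grid has already been resampled into a shared canonical frame and flattened to a list.

```python
import math
from itertools import combinations


def voxel_bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted occupancy probabilities
    and a 0/1 ground-truth grid (both given as flat sequences)."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(target)


def multi_view_loss(preds, target, weight=1.0):
    """Hypothetical multi-view loss (an assumption, not the paper's definition).

    preds:  one flattened occupancy grid per view, all assumed to be
            expressed in the same canonical frame.
    target: flattened ground-truth occupancy grid in that frame.
    weight: assumed trade-off between the two terms.
    """
    # Supervised term: every view's prediction is compared with ground truth.
    recon = sum(voxel_bce(p, target) for p in preds) / len(preds)

    # Consistency term: mean squared difference over all unordered view pairs.
    pairs = list(combinations(preds, 2))
    if pairs:
        consistency = sum(
            sum((a - b) ** 2 for a, b in zip(p, q)) / len(target)
            for p, q in pairs
        ) / len(pairs)
    else:
        consistency = 0.0  # a single view has nothing to disagree with

    return recon + weight * consistency
```

Under these assumptions, identical predictions across views incur no consistency penalty, so the loss reduces to the ordinary per-view reconstruction error; the penalty grows as the views' predictions diverge.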

Similar Papers

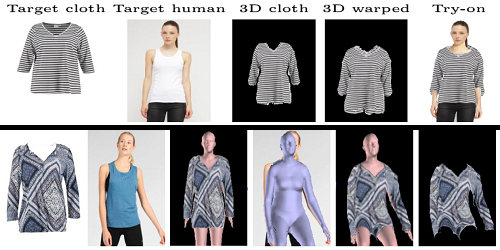

CloTH-VTON: Clothing Three-dimensional reconstruction for Hybrid image-based Virtual Try-ON

Matiur Rahman Minar (Seoul National University of Science and Technology), Heejune Ahn (Seoul National University of Science and Technology)*

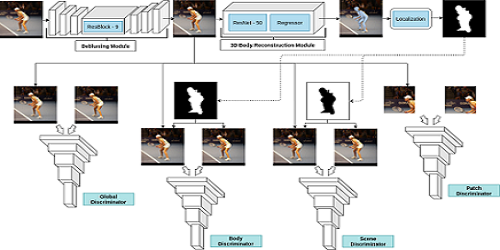

Human Motion Deblurring using Localized Body Prior

Jonathan Samuel Lumentut (Inha University), Joshua Santoso (Inha University), In Kyu Park (Inha University)*

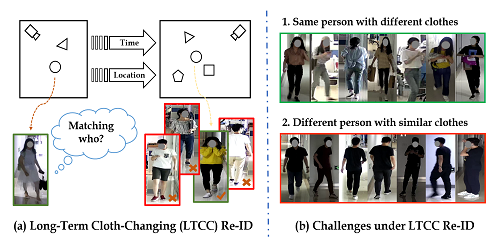

Long-Term Cloth-Changing Person Re-identification

Xuelin Qian (Fudan University), Wenxuan Wang (Fudan University), Li Zhang (University of Oxford), Fangrui Zhu (Fudan University), Yanwei Fu (Fudan University)*, Tao Xiang (University of Surrey), Yu-Gang Jiang (Fudan University), Xiangyang Xue (Fudan University)