CloTH-VTON: Clothing Three-dimensional reconstruction for Hybrid image-based Virtual Try-ON

Matiur Rahman Minar (Seoul National University of Science and Technology), Heejune Ahn (Seoul National University of Science and Technology)*

Keywords: Applications of Computer Vision, Vision for X

Abstract:

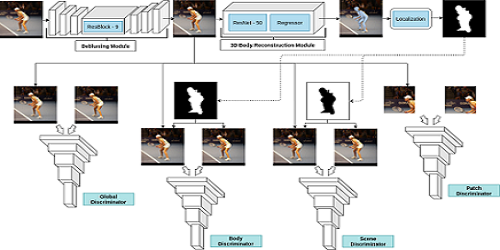

Virtual clothing try-on, which transfers a clothing image onto an image of a target person, is drawing both industrial and research attention. Recently proposed 2D image-based and 3D model-based methods each have benefits and limitations. 3D model-based methods provide realistic clothing deformations, but they require a difficult 3D model construction process and cannot handle non-clothing areas well. Image-based deep neural network methods are good at generating exposed human parts, retaining unchanged areas, and blending image parts, but they cannot handle large clothing deformations. In this paper, we propose CloTH-VTON, which combines the high-quality image synthesis of 2D image-based methods with 3D model-based deformation to the target human pose. To combine the two, we propose a novel method that reconstructs a 3D cloth model from a single 2D cloth image by leveraging a 3D human body model, and then transfers it to the shape and pose of the target person. Our cloth reconstruction method can be easily applied to diverse cloth categories. Our method produces final try-on output with naturally deformed clothing and details preserved at high resolution.

Similar Papers

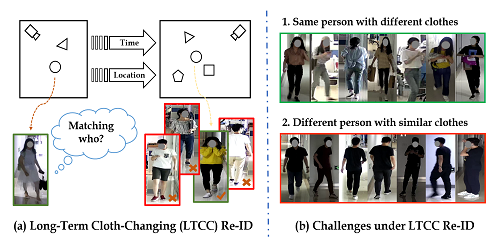

Long-Term Cloth-Changing Person Re-identification

Xuelin Qian (Fudan University), Wenxuan Wang (Fudan University), Li Zhang (University of Oxford), Fangrui Zhu (Fudan University), Yanwei Fu (Fudan University)*, Tao Xiang (University of Surrey), Yu-Gang Jiang (Fudan University), Xiangyang Xue (Fudan University)

Multi-View Consistency Loss for Improved Single-Image 3D Reconstruction of Clothed People

Akin Caliskan (Center for Vision Speech and Signal Processing - University of Surrey)*, Armin Mustafa (University of Surrey), Evren Imre (Vicon), Adrian Hilton (University of Surrey)

Human Motion Deblurring using Localized Body Prior

Jonathan Samuel Lumentut (Inha University), Joshua Santoso (Inha University), In Kyu Park (Inha University)*