Hierarchical X-Ray Report Generation via Pathology tags and Multi Head Attention

Preethi Srinivasan (IIT Mandi), Daksh Thapar (Indian Institute of Technology, Mandi)*, Arnav Bhavsar (IIT Mandi), Aditya Nigam (IIT Mandi)

Keywords: Biomedical Image Analysis

Abstract:

Examining radiology images, such as X-Ray images, as accurately as possible is a crucial step in providing the best healthcare. However, it requires considerable expertise and clinical experience, and it is time-consuming even for experienced radiologists. Hence, the automated generation of accurate radiology reports from chest X-Ray images is gaining popularity. In other image captioning tasks, coherence is the key criterion; medical image captioning additionally requires high accuracy in detecting anomalies and extracting information. That is, the report must be easy to read and must convey medical facts accurately. We propose a deep neural network to achieve this. Given a set of chest X-Ray images of a patient, the proposed network predicts the medical tags and generates a readable radiology report. To generate the report and tags, the network learns to extract salient image features with a deep CNN and generates tag embeddings for each patient's X-Ray images. We use transformers to learn self and cross attention. We first encode the image and tag features with self-attention to obtain a finer representation. We then use both sets of encoded features in cross attention with the input sequence to generate the report's Findings. Finally, cross attention is applied between the generated Findings and the input sequence to generate the report's Impressions. We evaluate the proposed network on a publicly available dataset. The results indicate that it generates readable radiology reports, with a relatively higher BLEU score than the state of the art (SOTA). The code and trained models are available at https://medicalcaption.github.io
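
The attention flow described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it uses single-head scaled dot-product attention with no learned projections, and the toy vectors, the `attention` helper, and the choice to concatenate the encoded image and tag features into one `memory` are all assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention on lists of vectors.

    Hypothetical helper: a real transformer layer would add learned
    query/key/value projections and multiple heads.
    """
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


# Toy inputs (made-up numbers): 3 image-region features from a CNN,
# 2 tag embeddings, and 4 input-sequence token embeddings, all of width 2.
image_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
tag_embeds = [[0.5, 0.5], [1.0, -1.0]]
tokens = [[0.1, 0.2], [0.3, 0.1], [0.0, 0.4], [0.2, 0.2]]

# Step 1: self-attention refines the image and tag features separately.
image_enc = attention(image_feats, image_feats, image_feats)
tag_enc = attention(tag_embeds, tag_embeds, tag_embeds)

# Step 2: cross attention from the input sequence to both feature sets
# (concatenated here as an assumption) yields states for the Findings.
memory = image_enc + tag_enc
findings_states = attention(tokens, memory, memory)

# Step 3: cross attention from the input sequence to the Findings states
# yields states for the Impressions.
impression_states = attention(tokens, findings_states, findings_states)

print(len(findings_states), len(impression_states))
```

Each output vector is a weighted average of the value vectors it attends to, so the Impressions states in this sketch depend on the images only through the Findings states, mirroring the hierarchical order described above.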

Similar Papers

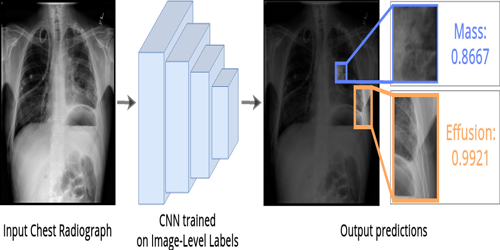

Self-Guided Multiple Instance Learning for Weakly Supervised Thoracic Disease Classification and Localization in Chest Radiographs

Constantin Seibold (Karlsruhe Institute of Technology)*, Jens Kleesiek (German Cancer Research Center), Heinz-Peter Schlemmer (German Cancer Research Center), Rainer Stiefelhagen (Karlsruhe Institute of Technology)

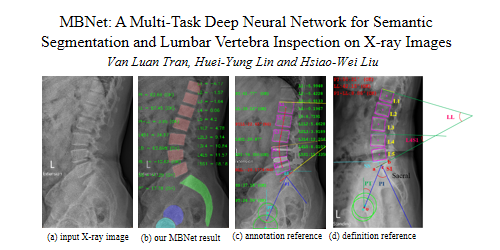

MBNet: A Multi-Task Deep Neural Network for Semantic Segmentation and Lumbar Vertebra Inspection on X-ray Images

Van Luan Tran (National Chung Cheng University)*, Huei-Yung Lin (National Chung Cheng University), Hsiao-Wei Liu (Industrial Technology Research Institute (ITRI))

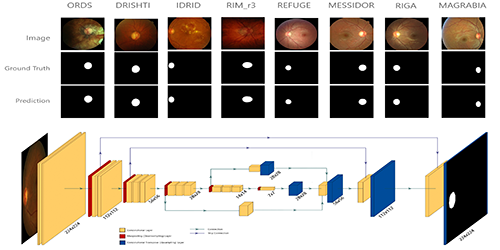

Utilizing Transfer Learning and a Customized Loss Function for Optic Disc Segmentation from Retinal Images

Abdullah Sarhan (University of Calgary)*, Ali Al-Khaz'Aly (University of Calgary), Adam Gorner (University of Calgary), Andrew Swift (University of Calgary), Jon Rokne (University of Calgary), Reda Alhajj (University of Calgary), Andrew Crichton (University of Calgary)