Pre-training without Natural Images

Hirokatsu Kataoka (National Institute of Advanced Industrial Science and Technology (AIST))*, Kazushige Okayasu (National Institute of Advanced Industrial Science and Technology (AIST)), Asato Matsumoto (National Institute of Advanced Industrial Science and Technology (AIST)), Eisuke Yamagata (Tokyo Institute of Technology), Ryosuke Yamada (Tokyo Denki University), Nakamasa Inoue (Tokyo Institute of Technology), Akio Nakamura (Tokyo Denki University (TDU)), Yutaka Satoh (National Institute of Advanced Industrial Science and Technology (AIST))

Keywords: Datasets and Performance Analysis

Abstract:

Is it possible to use convolutional neural networks pre-trained without any natural images to assist natural image understanding? This paper proposes a novel concept, Formula-driven Supervised Learning. We automatically generate image patterns and their category labels by assigning fractals, which are based on a natural law existing in the background knowledge of the real world. In theory, using automatically generated images instead of natural images in the pre-training phase allows us to generate a labeled image dataset of unlimited scale. Although models pre-trained with the proposed Fractal DataBase (FractalDB), a database without natural images, do not necessarily outperform models pre-trained with human-annotated datasets in all settings, they partially surpass the accuracy of ImageNet/Places pre-trained models. The image representation learned with the proposed FractalDB shows distinctive features in visualizations of its convolutional layers and attention.
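The abstract does not spell out how a fractal becomes a labeled image. Below is a minimal sketch, assuming each category is defined by one randomly sampled iterated function system (IFS) of 2D affine maps, rendered with the chaos game, and that the category label is simply the index of the IFS that drew the image. The function names (`sample_ifs`, `render`, `make_fractal_dataset`), the parameter ranges, the determinant-based map weights, and the shrink-factor jitter used for intra-class variation are all hypothetical illustration choices, not the authors' settings.

```python
import random


def sample_ifs(num_maps, rng):
    """Sample one hypothetical category: contractive affine maps plus selection weights.

    Each map is (a, b, c, d, e, f): (x, y) -> (a*x + b*y + e, c*x + d*y + f).
    """
    maps = []
    for _ in range(num_maps):
        # |a|+|b| <= 0.9 and |c|+|d| <= 0.9, so every map is a contraction
        # in the max-norm and the chaos-game orbit stays bounded.
        a, b, c, d = (rng.uniform(-0.45, 0.45) for _ in range(4))
        e, f = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        maps.append((a, b, c, d, e, f))
    # Assumed heuristic: weight each map by |det| of its linear part;
    # the small epsilon keeps every weight strictly positive.
    weights = [abs(a * d - b * c) + 1e-6 for a, b, c, d, _, _ in maps]
    return maps, weights


def render(maps, weights, rng, size=32, num_points=5000, burn_in=20):
    """Draw the IFS attractor with the chaos game into a binary size x size grid."""
    x, y = 0.0, 0.0
    points = []
    for i in range(num_points):
        # Pick one map at random (proportional to its weight) and apply it.
        a, b, c, d, e, f = rng.choices(maps, weights=weights)[0]
        x, y = a * x + b * y + e, c * x + d * y + f
        if i >= burn_in:  # skip early points that have not reached the attractor
            points.append((x, y))
    # Fit the point cloud into the grid, preserving its aspect ratio.
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    span = max(max(p[0] for p in points) - min_x,
               max(p[1] for p in points) - min_y) or 1.0
    img = [[0] * size for _ in range(size)]
    for px, py in points:
        col = int((px - min_x) / span * (size - 1))
        row = int((py - min_y) / span * (size - 1))
        img[row][col] = 1
    return img


def make_fractal_dataset(num_classes, images_per_class, size=32, num_points=5000, seed=0):
    """Return (image, label) pairs; the label is the index of the IFS that drew the image."""
    rng = random.Random(seed)
    dataset = []
    for label in range(num_classes):
        maps, weights = sample_ifs(rng.randint(2, 4), rng)
        for _ in range(images_per_class):
            # Hypothetical intra-class variation: shrink each linear part by a
            # factor in [0.9, 1.0], which keeps every map contractive.
            varied = []
            for a, b, c, d, e, f in maps:
                s = 1.0 - rng.uniform(0.0, 0.1)
                varied.append((a * s, b * s, c * s, d * s, e, f))
            dataset.append((render(varied, weights, rng, size, num_points), label))
    return dataset
```

For example, `make_fractal_dataset(num_classes=10, images_per_class=5)` returns 50 binary 32x32 images with labels 0 to 9. Because the labels come from the generating formula rather than from human annotation, `num_classes` and `images_per_class` can be raised as far as compute allows.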

Similar Papers

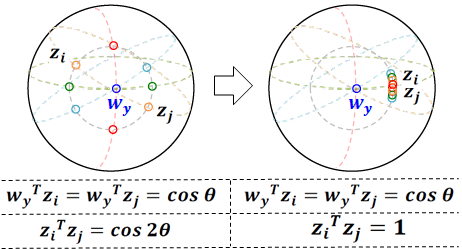

DiscFace: Minimum Discrepancy Learning for Deep Face Recognition

Insoo Kim (Samsung Advanced Institute of Technology)*, Seungju Han (Samsung Advanced Institute of Technology), Seong-Jin Park (Samsung Advanced Institute of Technology), Ji-won Baek (Samsung Advanced Institute of Technology), Jinwoo Shin (KAIST), Jae-Joon Han (Samsung), Changkyu Choi (Samsung)

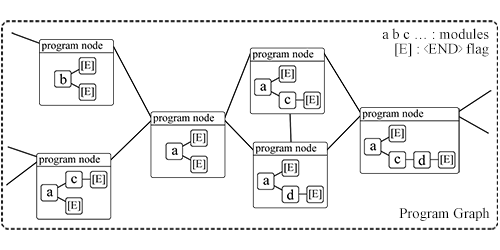

Graph-based Heuristic Search for Module Selection Procedure in Neural Module Network

Yuxuan Wu (The University of Tokyo)*, Hideki Nakayama (The University of Tokyo)

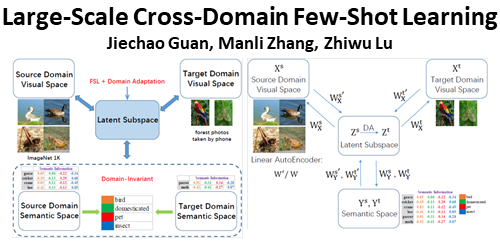

Large-Scale Cross-Domain Few-Shot Learning

Jiechao Guan (Renmin University of China), Manli Zhang (Renmin University of China), Zhiwu Lu (Renmin University of China)*