Lightweight Single-Image Super-Resolution Network with Attentive Auxiliary Feature Learning

Xuehui Wang (School of Data and Computer Science, Sun Yat-sen University), Qing Wang (School of Data and Computer Science, Sun Yat-sen University), Yuzhi Zhao (City University of Hong Kong), Junchi Yan (Shanghai Jiao Tong University), Lei Fan (Northwestern University), Long Chen (School of Data and Computer Science, Sun Yat-sen University)*

Keywords: Low-level Vision, Image Processing

Abstract:

Although convolutional network-based methods have boosted the performance of single image super-resolution (SISR), their huge computational costs restrict their practical applicability. In this paper, we develop a computationally efficient yet accurate network for SISR based on the proposed attentive auxiliary features (A$^2$F). First, to exploit the features from the bottom layers, the auxiliary features from all previous layers are projected into a common space. Then, to better utilize these projected auxiliary features and filter out redundant information, channel attention is employed to select the most important common features based on the current layer feature. We incorporate these two modules into a block and implement it within a lightweight network. Experimental results on a large-scale dataset demonstrate the effectiveness of the proposed algorithm against state-of-the-art (SOTA) SR methods. Notably, among models with fewer than 320K parameters, A$^2$F outperforms the SOTA methods at all scales, which demonstrates its ability to better utilize the auxiliary features.
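The two steps in the abstract (projecting auxiliary features into a common space, then gating them with channel attention driven by the current layer) can be sketched as below. This is a minimal illustration, not the authors' code: the abstract does not name the operators, so the 1x1-convolution projection, the squeeze-and-excitation style attention with reduction ratio `r`, the additive fusion, and the helper names `conv1x1` and `a2f_fuse` are all assumptions made for this example.

```python
import numpy as np

def conv1x1(x, w):
    """1x1 convolution (per-pixel channel mixing). x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def a2f_fuse(prev_feats, current, proj_weights, w_down, w_up):
    """Hypothetical A^2F-style fusion step (assumed operators, illustration only).

    prev_feats:   auxiliary features from previous layers, each of shape (C_i, H, W)
    current:      current-layer feature, shape (C, H, W)
    proj_weights: (C, C_i) matrices projecting each auxiliary feature to C channels
    w_down, w_up: (C//r, C) and (C, C//r) weights of an assumed SE-style attention branch
    """
    # 1) Project every auxiliary feature into the common C-channel space and sum them.
    common = sum(conv1x1(f, w) for f, w in zip(prev_feats, proj_weights))
    # 2) Channel attention computed from the current-layer feature:
    #    global average pooling -> bottleneck with ReLU -> sigmoid gate per channel.
    pooled = current.mean(axis=(1, 2))          # shape (C,)
    hidden = np.maximum(w_down @ pooled, 0.0)   # shape (C//r,)
    gate = sigmoid(w_up @ hidden)               # shape (C,), each value in (0, 1)
    # 3) Reweight the common auxiliary feature channel-wise and add it to the current one.
    return current + gate[:, None, None] * common

# Usage with random weights: three earlier layers with 3, 8 and 8 channels.
rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 2
prev = [rng.standard_normal((c, H, W)) for c in (3, 8, 8)]
cur = rng.standard_normal((C, H, W))
projs = [rng.standard_normal((C, f.shape[0])) for f in prev]
out = a2f_fuse(prev, cur, projs,
               rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
print(out.shape)  # (8, 4, 4)
```

Because the gate lies in (0, 1) per channel, the attention can only scale down each projected auxiliary channel, which is one simple way to "filter redundant information"; a 1x1 projection also keeps the added parameter count small, in line with the lightweight goal, though the paper's actual design may differ.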

Similar Papers

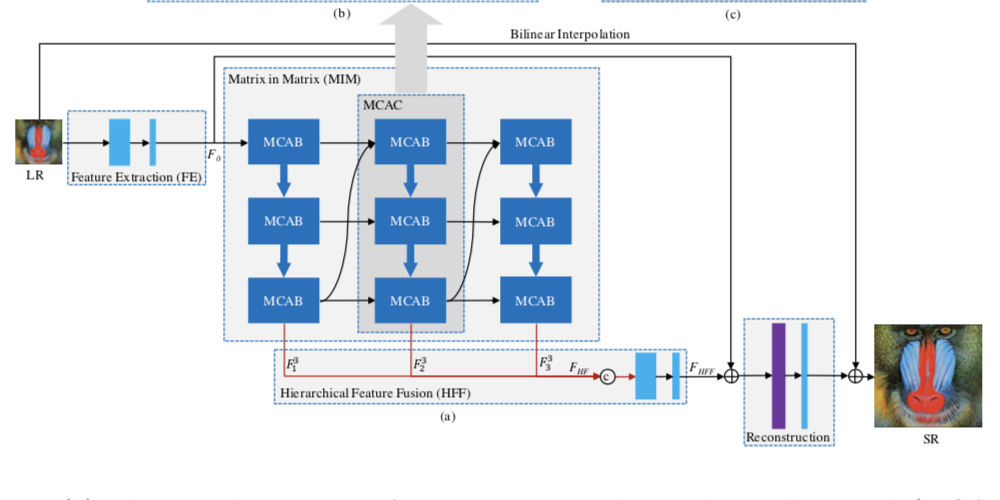

Accurate and Efficient Single Image Super-Resolution with Matrix Channel Attention Network

Hailong Ma (Xiaomi), Xiangxiang Chu (Xiaomi), Bo Zhang (Xiaomi)*

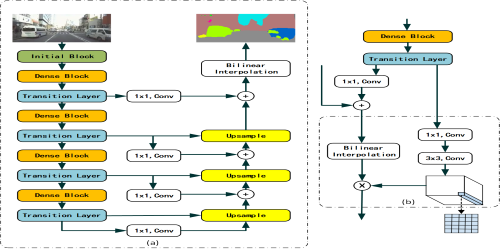

Dense Dual-Path Network for Real-time Semantic Segmentation

Xinneng Yang (Tongji University)*, Yan Wu (Tongji University), Junqiao Zhao (Tongji University), Feilin Liu (Tongji University)

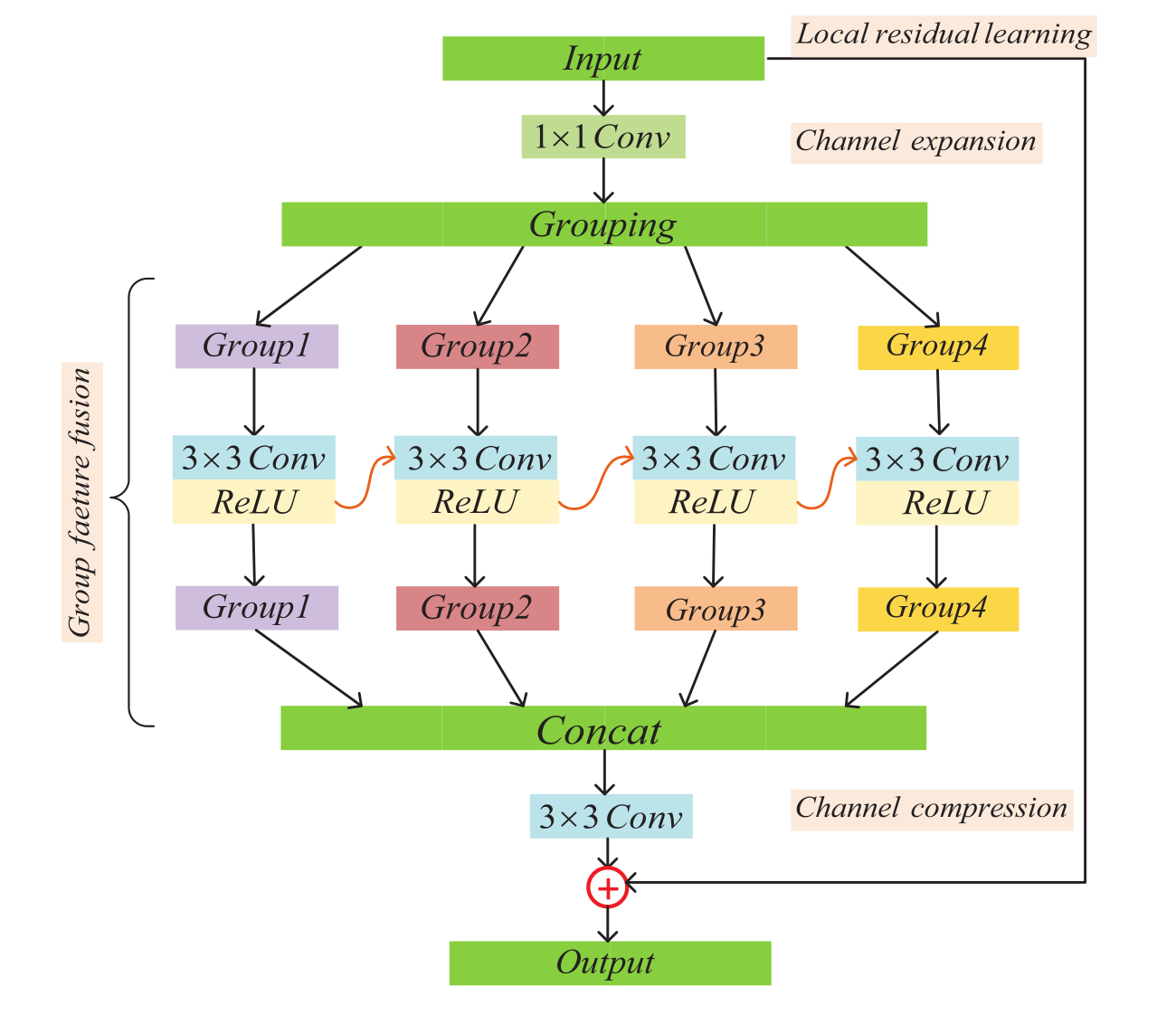

An Efficient Group Feature Fusion Residual Network for Image Super-Resolution

Pengcheng Lei (University of Shanghai for Science and Technology), Cong Liu (University of Shanghai for Science and Technology)*