Attention-Aware Feature Aggregation for Real-time Stereo Matching on Edge Devices

Jia-Ren Chang (National Chiao Tung University, aetherAI), Pei-Chun Chang (National Chiao Tung University), Yong-Sheng Chen (National Chiao Tung University)*

Keywords: 3D Computer Vision

Abstract:

Recent works have demonstrated superior results for depth estimation from a stereo pair of images using convolutional neural networks. However, these methods require large amounts of computational resources and are not suited to real-time applications on edge devices. In this work, we propose a novel method for real-time stereo matching on edge devices, which consists of an efficient backbone for feature extraction, an attention-aware feature aggregation module, and a cascaded 3D CNN architecture for multi-scale disparity estimation. The efficient backbone is designed to generate multi-scale feature maps under a constrained computational budget. The multi-scale feature maps are then adaptively aggregated by the proposed attention-aware feature aggregation module to improve the representational capacity of the features. Multi-scale cost volumes are constructed from the aggregated feature maps and regularized by a cascaded 3D CNN architecture to estimate disparity maps in an anytime setting. The network infers a disparity map at low resolution and then progressively refines it at higher resolutions by estimating disparity residuals. Because informative features are extracted and aggregated efficiently, the proposed method achieves accurate depth estimation in real time. Experimental results demonstrate that the proposed method processes stereo image pairs with a resolution of 1242x375 at 12-33 fps on an NVIDIA Jetson TX2 module while achieving competitive depth estimation accuracy. The code is available at https://github.com/JiaRenChang/RealtimeStereo .
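The coarse-to-fine cascade described above (a full disparity search at low resolution, then a residual search over a small range around the upsampled estimate at each higher resolution) can be illustrated with a toy 1-D sketch. It rests on assumptions the abstract does not state: scalar per-pixel "features" stand in for learned feature maps, the matching cost is an L1 (absolute-difference) distance, disparity is read out with soft-argmin, upsampling is 2x nearest-neighbour, and the residual search radius is 2 pixels. All function names are hypothetical. This is not the authors' implementation (see the linked repository for that), and it omits the backbone, the attention-aware aggregation, and the 3D CNN regularization entirely.

```python
import math
from typing import List, Sequence


def soft_argmin(costs: Sequence[float], candidates: Sequence[float]) -> float:
    """Expected disparity under softmax(-cost): a differentiable stand-in for argmin."""
    m = min(costs)  # shift by the minimum cost for numerical stability
    weights = [math.exp(-(c - m)) for c in costs]
    return sum(w * d for w, d in zip(weights, candidates)) / sum(weights)


def sample(row: Sequence[float], x: float) -> float:
    """Linearly interpolate `row` at fractional position x, clamping at the borders."""
    x = min(max(x, 0.0), len(row) - 1.0)
    x0 = int(math.floor(x))
    x1 = min(x0 + 1, len(row) - 1)
    t = x - x0
    return (1.0 - t) * row[x0] + t * row[x1]


def downsample(row: Sequence[float]) -> List[float]:
    """Halve the resolution by averaging neighbouring pairs of pixels."""
    return [(row[i] + row[i + 1]) / 2.0 for i in range(0, len(row) - 1, 2)]


def coarse_stage(left: Sequence[float], right: Sequence[float],
                 max_disp: int, sharpness: float) -> List[float]:
    """Full cost volume over disparities 0..max_disp, then soft-argmin per pixel."""
    cands = [float(d) for d in range(max_disp + 1)]
    out = []
    for x in range(len(left)):
        # Assumed matching cost: L1 distance between left[x] and right[x - d].
        costs = [sharpness * abs(left[x] - sample(right, x - d)) for d in cands]
        out.append(soft_argmin(costs, cands))
    return out


def residual_stage(left: Sequence[float], right: Sequence[float],
                   coarse: Sequence[float], radius: int, sharpness: float) -> List[float]:
    """Upsample the coarse disparity 2x, warp the right row, and regress a residual."""
    offsets = [float(r) for r in range(-radius, radius + 1)]
    out = []
    for x in range(len(left)):
        d_up = 2.0 * coarse[x // 2]  # nearest-neighbour upsampling; disparity values double
        # Small residual cost volume: only 2*radius+1 offsets around the warped match.
        costs = [sharpness * abs(left[x] - sample(right, x - d_up - r)) for r in offsets]
        out.append(d_up + soft_argmin(costs, offsets))
    return out


def profile(x: float) -> float:
    """Monotone toy intensity profile so every match is unambiguous."""
    return x + 0.3 * math.sin(x)


left = [profile(x) for x in range(32)]
right = [profile(x + 4) for x in range(32)]  # true disparity is 4 everywhere
coarse = coarse_stage(downsample(left), downsample(right), max_disp=4, sharpness=20.0)
fine = residual_stage(left, right, coarse, radius=2, sharpness=20.0)
print([round(d, 2) for d in fine[12:20]])
```

The residual stage evaluates only 2*radius+1 offsets per pixel instead of the full disparity range, which is where a cascade of this kind saves computation. Each stage also produces a usable disparity map on its own, which is the property that allows inference to stop early in an anytime setting. Pixels near the left border are unreliable in this toy because out-of-range samples are clamped.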

Similar Papers

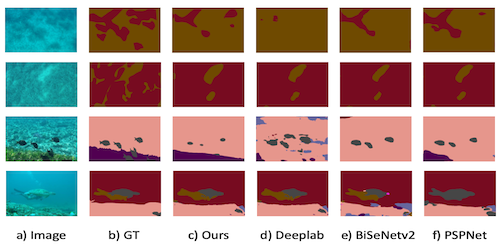

Compact and Fast Underwater Segmentation Network for Autonomous Underwater Vehicles

Jiangtao Wang (Loughborough University), Baihua Li (Loughborough University)*, Yang Zhou (Loughborough University), Emanuele Rocco (Witted Srl), Qinggang Meng (Computer Science Department, Loughborough University)

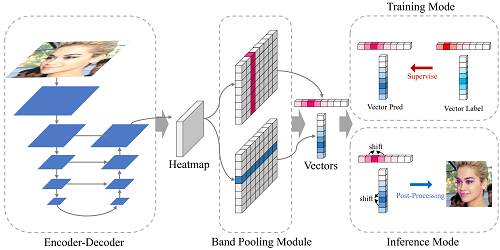

Gaussian Vector: An Efficient Solution for Facial Landmark Detection

Yilin Xiong (Central South University)*, Zijian Zhou (Horizon), Yuhao Dou (Horizon), Zhizhong Su (Horizon Robotics)

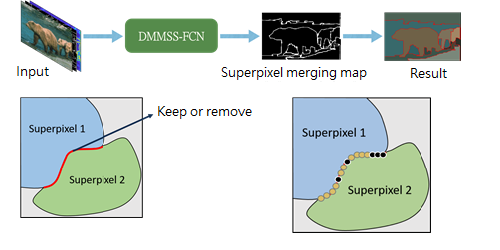

Generic Image Segmentation in Fully Convolutional Networks by Superpixel Merging Map

Jin-Yu Huang (National Taiwan University), Jian-Jiun Ding (National Taiwan University)*