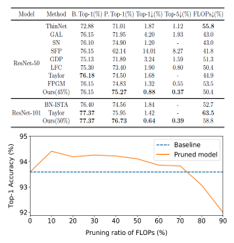

Towards Optimal Filter Pruning with Balanced Performance and Pruning Speed

Dong Li (Nuctech)*, Sitong Chen (Nuctech), Xudong Liu (Nuctech), Yunda Sun (Nuctech), Li Zhang (Nuctech)

Keywords: Deep Learning for Computer Vision

Abstract:

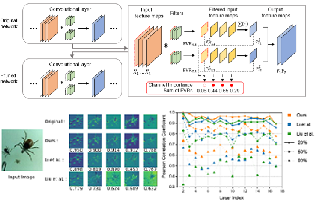

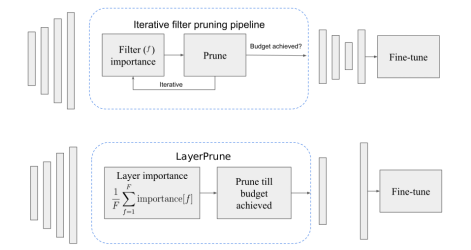

Filter pruning has drawn increasing attention because resource-constrained platforms require more compact models for deployment. However, current pruning methods suffer from either the inferior performance of one-shot methods or the high time cost of iterative training methods. In this paper, we propose a filter pruning method that balances performance and pruning speed. Based on the filter importance criteria, our method prunes each layer at an approximately optimal layer-wise pruning rate for a preset loss variation. The network is pruned layer by layer, without time-consuming prune-retrain iterations. If a pruning rate for the entire network is predefined, we also introduce a method that converges quickly to the corresponding loss variation threshold. Moreover, we propose layer group pruning and a channel selection mechanism for channel alignment in networks with short connections. The proposed pruning method is widely applicable to common architectures and does not involve any additional training except the final fine-tuning. Comprehensive experiments show that our method outperforms many state-of-the-art approaches.
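The abstract does not spell out the importance criterion or the threshold search, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes each filter has a non-negative importance score that approximates the loss increase caused by removing it, that the loss variation of a layer is approximated by the sum of the scores of its removed filters, and that the threshold for a target network-wide pruning rate can be found by bisection. All function names (`filters_to_prune`, `network_pruning_rate`, `find_threshold`) are hypothetical and are not taken from the paper.

```python
from typing import Dict, List


def filters_to_prune(scores: List[float], threshold: float) -> int:
    """Count how many of the least important filters can be removed
    before their summed importance exceeds the loss variation threshold.
    Assumption: scores are non-negative and additive within a layer."""
    total, count = 0.0, 0
    for s in sorted(scores):
        if total + s > threshold:
            break
        total += s
        count += 1
    # Keep at least one filter so the layer is never removed entirely.
    return min(count, len(scores) - 1)


def network_pruning_rate(layer_scores: Dict[str, List[float]],
                         threshold: float) -> float:
    """Fraction of all filters removed when every layer is pruned
    independently at the same loss variation threshold."""
    pruned = sum(filters_to_prune(s, threshold) for s in layer_scores.values())
    total = sum(len(s) for s in layer_scores.values())
    return pruned / total


def find_threshold(layer_scores: Dict[str, List[float]],
                   target_rate: float,
                   tol: float = 1e-3,
                   max_iter: int = 50) -> float:
    """Bisection search (an assumed stand-in for the paper's search method)
    for a threshold whose network-wide pruning rate is close to target_rate.
    It relies on the pruning rate being non-decreasing in the threshold."""
    lo, hi = 0.0, max(sum(s) for s in layer_scores.values())
    for _ in range(max_iter):
        mid = (lo + hi) / 2
        rate = network_pruning_rate(layer_scores, mid)
        if abs(rate - target_rate) <= tol:
            return mid
        if rate < target_rate:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2
```

With made-up scores such as `{"conv1": [0.1, 0.2, 0.3, 0.4], "conv2": [0.05, 0.05, 0.5, 0.6]}` and a target rate of 0.5, `find_threshold` returns a threshold at which each layer loses its two least important filters. Because no retraining happens inside the loop, each bisection step costs only a pass over the stored scores, which is the kind of speed advantage over prune-retrain iteration that the abstract describes.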

Similar Papers

Feature Variance Ratio-Guided Channel Pruning for Deep Convolutional Network Acceleration

Junjie He (Zhejiang University)*, Bohua Chen (Zhejiang University), Yinzhang Ding (Zhejiang University), Dongxiao Li (Zhejiang University)

To filter prune, or to layer prune, that is the question

Sara Elkerdawy (University of Alberta)*, Mostafa Elhoushi (Huawei Technologies), Abhineet Singh (University of Alberta), Hong Zhang (University of Alberta), Nilanjan Ray (University of Alberta)

Channel Pruning for Accelerating Convolutional Neural Networks via Wasserstein Metric

Haoran Duan (University of Science and Technology of China (USTC))*, Hui Li (University of Science and Technology of China (USTC))