Bridging Adversarial and Statistical Domain Transfer via Spectral Adaptation Networks

Christoph Raab (FHWS)*, Philipp Väth (FHWS), Peter Meier (FHWS), Frank-Michael Schleif (FHWS)

Keywords: Applications of Computer Vision, Vision for X; Statistical Methods and Learning

Abstract:

Statistical and adversarial adaptation are currently two extensive categories of neural network architectures in unsupervised deep domain adaptation. The latter has become the new standard due to its good theoretical foundation and empirical performance. However, there are two shortcomings. First, recent studies show that these approaches focus too much on easily transferable features and thus neglect important discriminative information. Second, adversarial networks are challenging to train. We address the first issue by aligning transferable spectral properties within an adversarial model to balance the focus between the easily transferable features and the necessary discriminative features, while at the same time limiting the learning of domain-specific semantics through relevance considerations. We address the second issue by stabilizing the discriminator network's training procedure with Spectral Normalization, employing Lipschitz continuous gradients. We provide a theoretical and empirical evaluation of our improved approach and show its effectiveness in a performance study on standard benchmark data sets against various other state-of-the-art methods.
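The abstract does not spell out how Spectral Normalization is applied to the discriminator. As a rough illustration only, assuming the commonly used power-iteration formulation, the sketch below estimates the largest singular value of a weight matrix and rescales the matrix so that its spectral norm is approximately 1. The function name `spectral_normalize` and its parameters are hypothetical and are not taken from the paper.

```python
import numpy as np

def spectral_normalize(weight, n_iters=50, eps=1e-12, seed=0):
    """Rescale `weight` by a power-iteration estimate of its largest singular value.

    Hypothetical helper for illustration; not the authors' implementation.
    """
    rng = np.random.default_rng(seed)
    # Random starting vector for the left singular direction.
    u = rng.normal(size=weight.shape[0])
    u /= np.linalg.norm(u) + eps
    for _ in range(n_iters):
        # Alternate between right (v) and left (u) singular direction estimates.
        v = weight.T @ u
        v /= np.linalg.norm(v) + eps
        u = weight @ v
        u /= np.linalg.norm(u) + eps
    # Estimate of the largest singular value (the spectral norm).
    sigma = float(u @ weight @ v)
    return weight / (sigma + eps), sigma

# Toy weight matrix whose largest singular value is 3 by construction.
W = np.array([[3.0, 0.0], [0.0, 1.0]])
W_sn, sigma = spectral_normalize(W)
```

In practice, deep learning frameworks typically run a single power-iteration step per training update and reuse `u` across updates; the many iterations here only serve to make the toy example converge in one call.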

Similar Papers

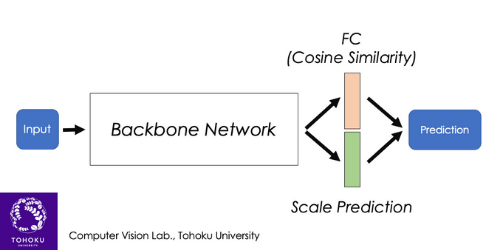

Hyperparameter-Free Out-of-Distribution Detection Using Cosine Similarity

Engkarat Techapanurak (Tohoku University)*, Masanori Suganuma (RIKEN AIP / Tohoku University), Takayuki Okatani (Tohoku University/RIKEN AIP)

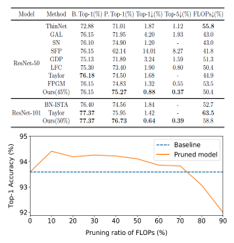

Channel Pruning for Accelerating Convolutional Neural Networks via Wasserstein Metric

Haoran Duan (University of Science and Technology of China (USTC))*, Hui Li (University of Science and Technology of China (USTC))

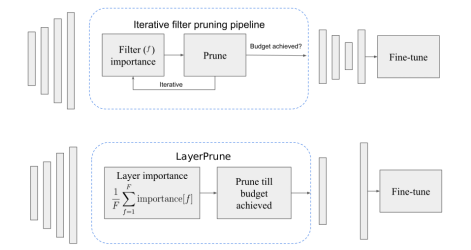

To filter prune, or to layer prune, that is the question

Sara Elkerdawy (University of Alberta)*, Mostafa Elhoushi (Huawei Technologies), Abhineet Singh (University of Alberta), Hong Zhang (University of Alberta), Nilanjan Ray (University of Alberta)