Abstract:

Previous work on novel object detection considers zero-shot or few-shot settings, where no examples or only a few examples of each category are available for training. In real-world scenarios, it is unrealistic to expect that all the novel classes are either unseen or have only a few examples. Here, we propose a more realistic setting termed 'Any-shot detection', where totally unseen and few-shot categories can co-occur during inference. Compared to conventional novel object detection, any-shot detection poses unique challenges: a high imbalance between unseen, few-shot and seen object classes, susceptibility to forgetting what was learned during base training while learning novel classes, and difficulty distinguishing novel classes from the background. To address these challenges, we propose a unified any-shot detection model that can concurrently learn to detect both zero-shot and few-shot object classes. Our core idea is to use class semantics as prototypes for object detection, a formulation that naturally minimizes knowledge forgetting and mitigates class imbalance in the label space. In addition, we propose a rebalanced loss function that emphasizes difficult few-shot cases but avoids overfitting on the novel classes, so that totally unseen classes can still be detected. Without bells and whistles, our framework can also be used on its own for zero-shot object detection and few-shot object detection tasks. We report extensive experiments on the Pascal VOC and MS-COCO datasets, where our approach is shown to provide significant improvements.
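
The abstract does not spell out how class semantics act as prototypes. Below is a minimal sketch of one common way to do it. It assumes a region feature is linearly projected into a word-embedding space and scored against fixed class embeddings by temperature-scaled cosine similarity. This is a generic zero-shot design, not necessarily the authors' exact formulation. The projection matrix, the toy 2-D embeddings, the class names and the `temperature` value are all hypothetical.

```python
import math
from typing import Dict, List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return [0.0 for _ in v]
    return [x / norm for x in v]


def matvec(matrix: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def prototype_scores(
    visual_feature: Sequence[float],
    projection: Sequence[Sequence[float]],  # hypothetical learned visual-to-semantic map
    prototypes: Dict[str, Sequence[float]],  # fixed class embeddings (e.g. word vectors)
    temperature: float = 0.1,  # hypothetical sharpness of the softmax
) -> Dict[str, float]:
    """Score one region against every class prototype (seen, few-shot or unseen)."""
    # Project the region feature into the semantic space and normalize it.
    z = l2_normalize(matvec(projection, visual_feature))
    names = list(prototypes)
    # Cosine similarity between the projected feature and each class embedding.
    sims = [sum(a * b for a, b in zip(z, l2_normalize(prototypes[n]))) for n in names]
    probs = softmax([s / temperature for s in sims])
    return dict(zip(names, probs))


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    # Toy embeddings: "dog" seen, "cat" few-shot, "zebra" unseen (all hypothetical).
    classes = {"dog": [1.0, 0.0], "cat": [0.9, 0.4], "zebra": [0.0, 1.0]}
    probs = prototype_scores([1.0, 0.1], identity, classes)
    print(max(probs, key=probs.get), probs)
```

The prototypes here are fixed embeddings, not per-class trainable weights, so a new class can be added by inserting its embedding into `prototypes` without retraining the projection. This is one way to read the abstract's claim that the formulation limits forgetting and class imbalance.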

Similar Papers

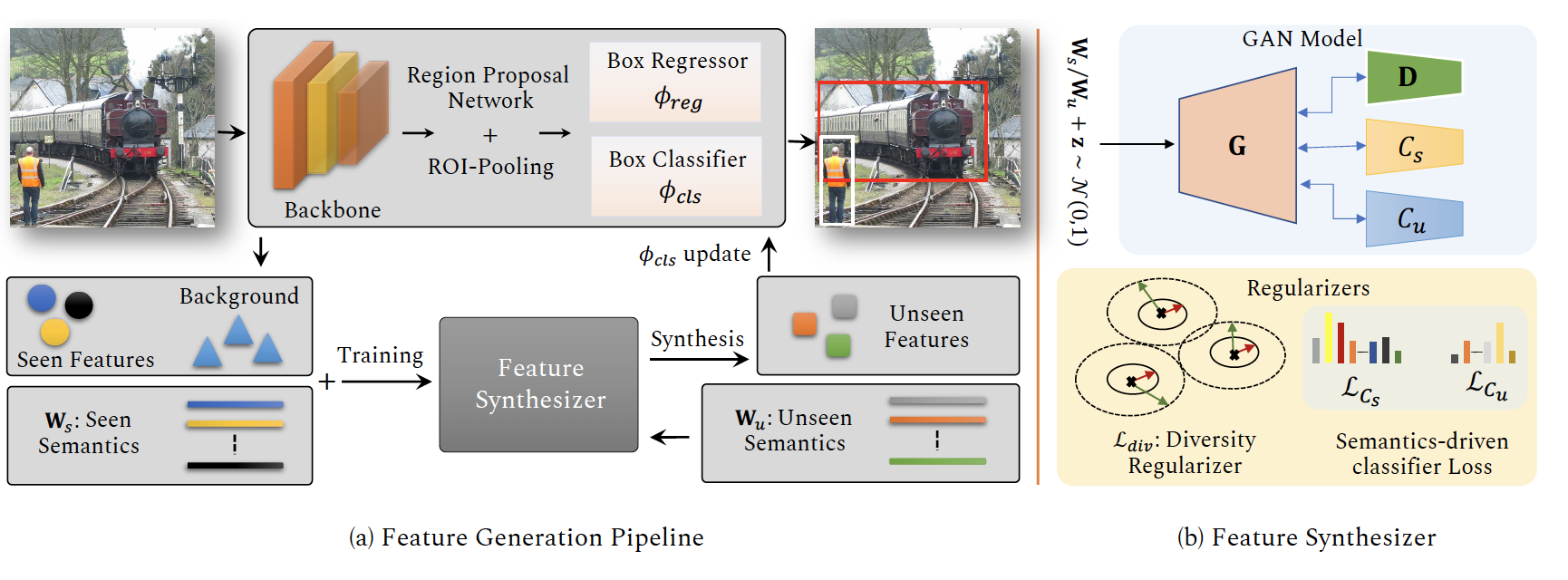

Synthesizing the Unseen for Zero-shot Object Detection

Nasir Hayat (IIAI), Munawar Hayat (IIAI)*, Shafin Rahman (North South University), Salman Khan (Australian National University (ANU)), Syed Waqas Zamir (IIAI), Fahad Shahbaz Khan (Inception Institute of Artificial Intelligence)

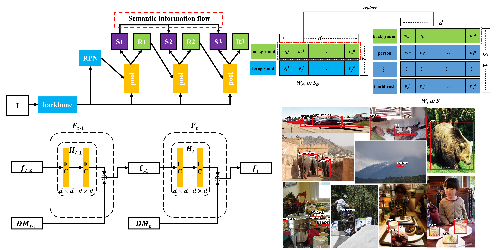

Background Learnable Cascade for Zero-Shot Object Detection

Ye Zheng (Institute of Computing Technology, Chinese Academy of Sciences)*, Ruoran Huang (Institute of Computing Technology, Chinese Academy of Sciences), Chuanqi Han (Institute of Computing Technology, Chinese Academy of Sciences), Xi Huang (Institute of Computing Technology, Chinese Academy of Sciences), Li Cui (Institute of Computing Technology, Chinese Academy of Sciences)

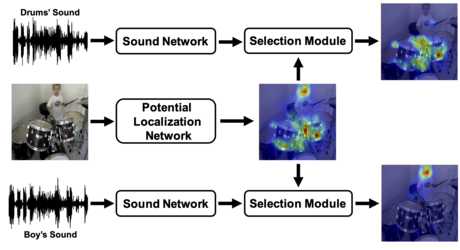

Do We Need Sound for Sound Source Localization?

Takashi Oya (Waseda University)*, Shohei Iwase (Waseda University), Ryota Natsume (Waseda University), Takahiro Itazuri (Waseda University), Shugo Yamaguchi (Waseda University), Shigeo Morishima (Waseda Research Institute for Science and Engineering)