Synthetic-to-Real Unsupervised Domain Adaptation for Scene Text Detection in the Wild

Weijia Wu (Zhejiang University)*, Ning Lu (Tencent Cloud Product Department), Enze Xie (The University of Hong Kong), Yuxing Wang (Zhejiang University), Wenwen Yu (Xuzhou Medical University), Cheng Yang (Zhejiang University), Hong Zhou (Zhejiang University)

Keywords: Recognition: Feature Detection, Indexing, Matching, and Shape Representation

Abstract:

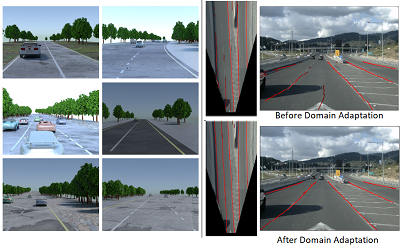

Deep learning-based scene text detection can achieve strong performance when sufficient labeled training data are available. However, manual labeling is time-consuming and laborious, and in extreme cases annotated data are unavailable altogether. Exploiting synthetic data is a very promising solution, but synthetic and real datasets differ in their domain distributions. To address this severe domain distribution mismatch, we propose a synthetic-to-real domain adaptation method for scene text detection, which transfers knowledge from synthetic data (source domain) to real data (target domain). We introduce two components for domain adaptive scene text detection: text self-training (TST) and adversarial text instance alignment (ATA). ATA helps the network learn domain-invariant features by training a domain classifier in an adversarial manner. TST diminishes the adverse effects of false positives (FPs) and false negatives (FNs) caused by inaccurate pseudo-labels. Both components improve the performance of scene text detectors when adapting from synthetic to real scenes. We evaluate the proposed method by transferring from SynthText and VISD to ICDAR2015 and ICDAR2013. The results demonstrate the effectiveness of the proposed method, with up to a 10% improvement, and provide an important exploration of domain adaptive scene text detection.
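The abstract states that TST limits the damage from inaccurate pseudo-labels but does not say how. As a minimal sketch, assuming a dual-threshold scheme (a common pseudo-labeling technique, not necessarily the authors' exact method), target-domain detections can be split into confident positives, an ignored band that is masked out of the loss, and discarded low-confidence boxes. The `Detection` type, the `split_pseudo_labels` function, and the threshold values 0.9 and 0.5 are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    # Hypothetical container: axis-aligned box (x1, y1, x2, y2) plus a text confidence score.
    box: Tuple[float, float, float, float]
    score: float


def split_pseudo_labels(
    detections: List[Detection],
    pos_thresh: float = 0.9,  # hypothetical threshold, not taken from the paper
    neg_thresh: float = 0.5,  # hypothetical threshold, not taken from the paper
) -> Tuple[List[Detection], List[Detection]]:
    """Partition detections on one unlabeled target-domain image.

    Assumed dual-threshold scheme (illustrative only):
    - positives: score >= pos_thresh, used as pseudo ground-truth text instances.
    - ignored: neg_thresh <= score < pos_thresh, masked out of the loss so an
      uncertain box is neither forced to be text nor forced to be background.
    Detections scoring below neg_thresh are dropped; their regions count as background.
    """
    if not 0.0 <= neg_thresh <= pos_thresh <= 1.0:
        raise ValueError("expected 0 <= neg_thresh <= pos_thresh <= 1")
    positives = [d for d in detections if d.score >= pos_thresh]
    ignored = [d for d in detections if neg_thresh <= d.score < pos_thresh]
    return positives, ignored


if __name__ == "__main__":
    dets = [
        Detection((0, 0, 10, 5), 0.95),
        Detection((20, 0, 30, 5), 0.7),
        Detection((40, 0, 50, 5), 0.2),
    ]
    pos, ign = split_pseudo_labels(dets)
    print([d.score for d in pos], [d.score for d in ign])
```

Applied to detections scored 0.95, 0.7, and 0.2, this keeps the first as a pseudo-positive, ignores the second, and drops the third. Ignoring the middle band is the design choice that targets both error types: an uncertain box is neither trained as text (a possible FP) nor trained as background (a possible FN).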

Similar Papers

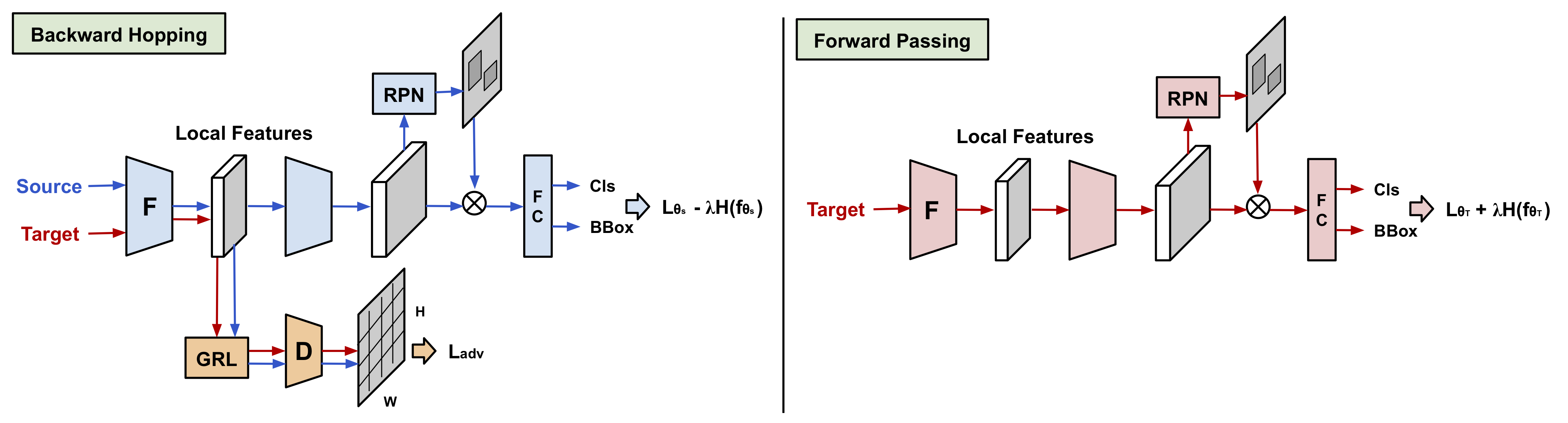

Unsupervised Domain Adaptive Object Detection using Forward-Backward Cyclic Adaptation

Siqi Yang (University of Queensland)*, Lin Wu (University of Queensland), Arnold Wiliem (University of Queensland), Brian C. Lovell (University of Queensland)

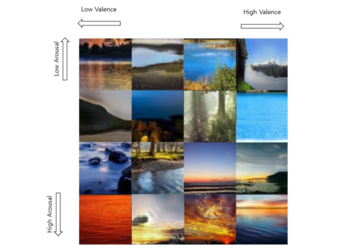

Emotional Landscape Image Generation Using Generative Adversarial Networks

Chanjong Park (Yonsei University), In-Kwon Lee (Yonsei University)*

Synthetic-to-real domain adaptation for lane detection

Noa Garnett (General Motors), Roy Uziel (Ben-Gurion University), Netalee Efrat (General Motors), Dan Levi (General Motors)*